This section in Pyplan lets us automatically validate that our applications, interfaces, and nodes behave as expected.

¶ Automation test manager

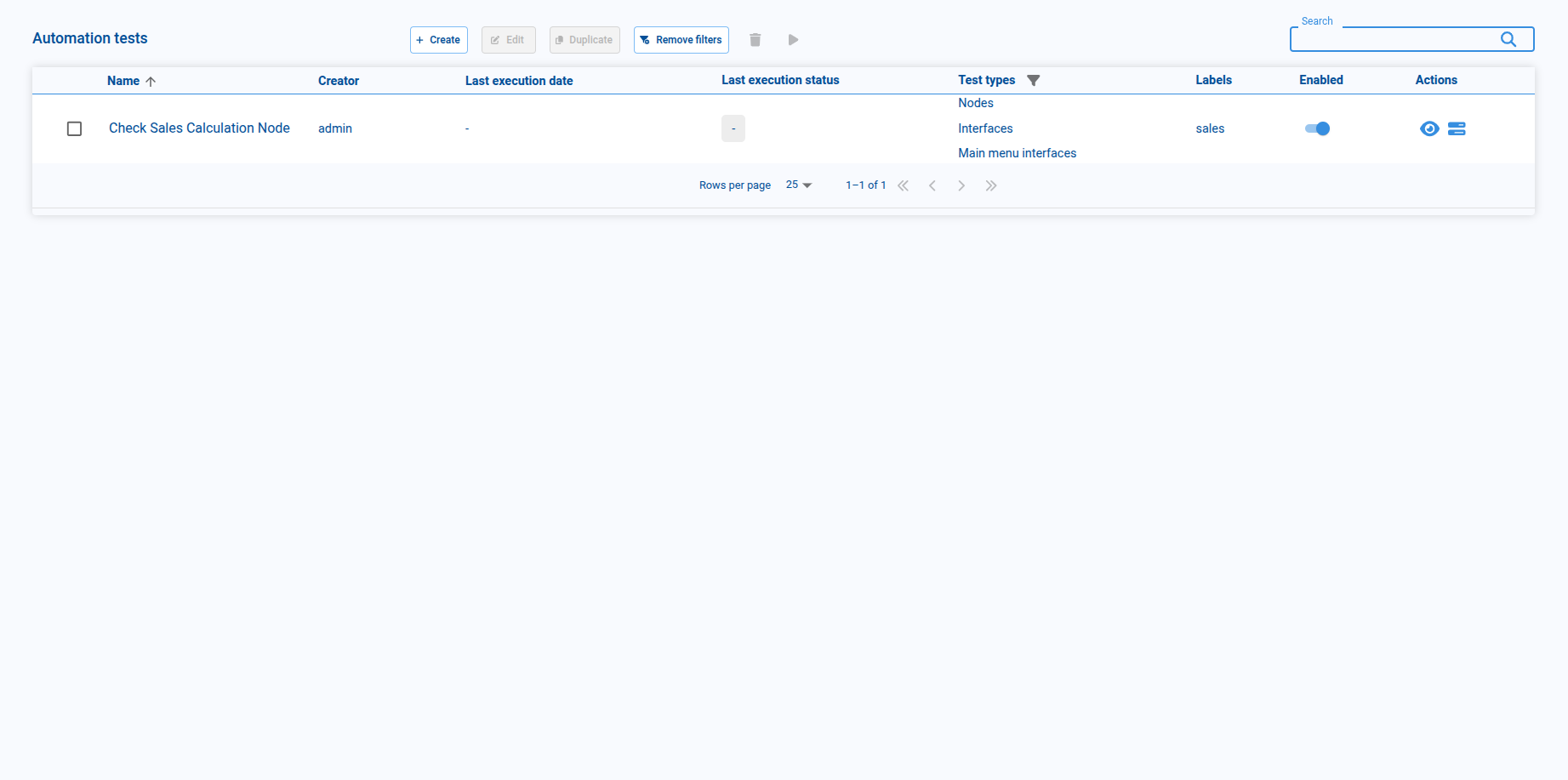

The Automation Test Manager is the main screen where we list and control all automation tests defined in a Pyplan application.

From this view we can:

- Create, edit, and duplicate tests.

- See key information: Name, Creator, Last execution date, Last execution status, Test types, Labels, and whether the test is Enabled.

- Use Actions on each row:

  - Open a read‑only view of the preconditions configured for that test.

  - Open the execution logs for that test to review previous runs and errors.

When we select a test, the toolbar actions at the top become active:

- Delete, to remove the selected test.

- Run, to execute the selected test.

¶ Create a test

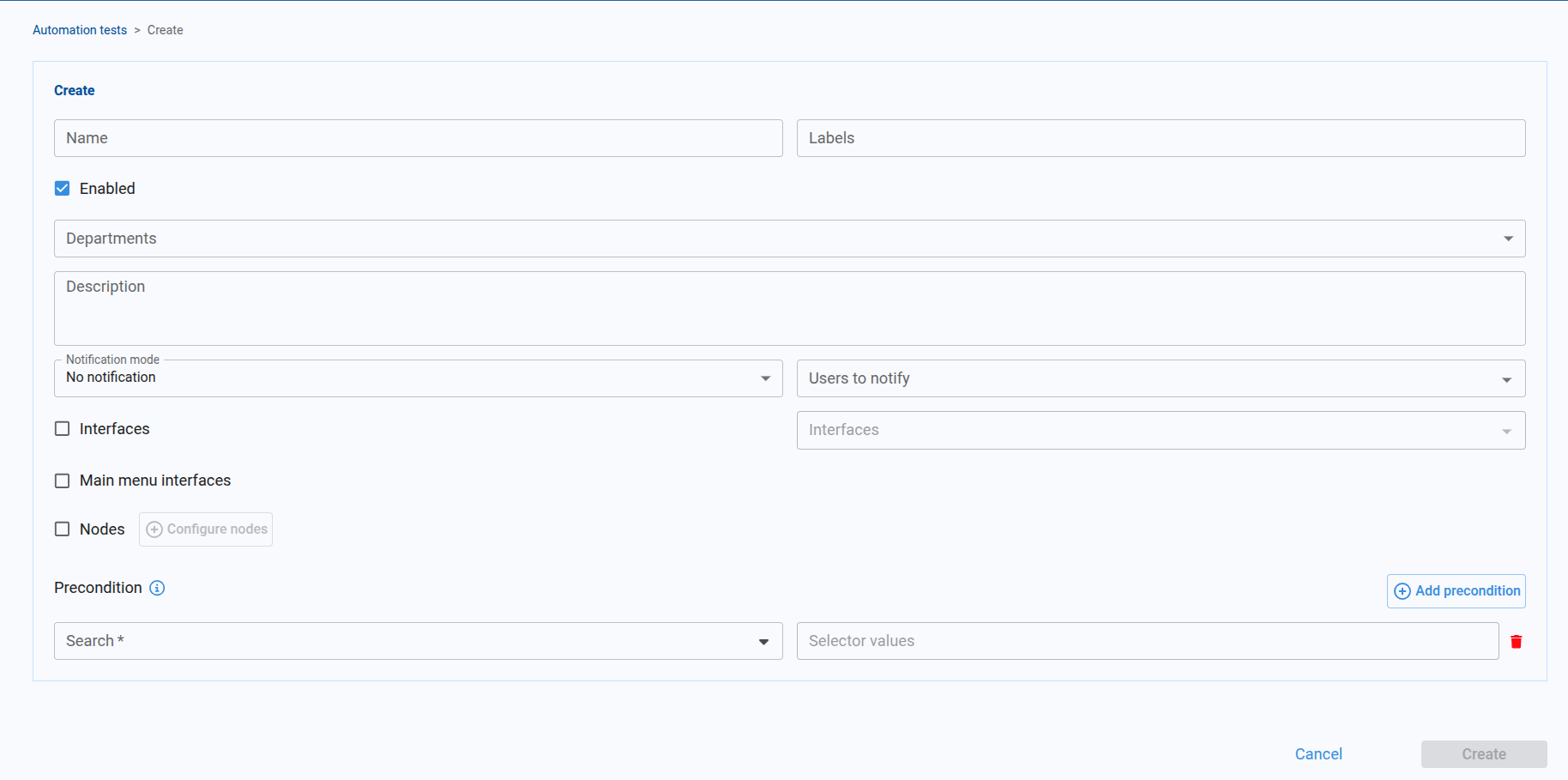

The Create test screen is where we define a new automation test from scratch. From this page we configure the test’s identity, how notifications are sent, what the test will validate and any preconditions that must be met before execution.

On the Automation Tests page, click Create to open the Create Automation Test form.

The configuration form appears, as shown in the screenshot above.

¶ Parameters

Name

The display name of the automation test.

Labels

Optional tags to group and search automation tests.

Enabled

A checkbox that controls whether the test is active.

Departments

Dropdown list to associate the test with one or more departments.

Description

A free-text field to describe what the test does and what success means.

Notification mode

A dropdown that defines if and when notifications are sent.

Available options:

- Always send emails - Pyplan sends an email every time the test finishes, whether it passes or fails.

- Send emails only on failure - Pyplan sends an email only when the test result is a failure. This is usually the best option for production or high‑volume tests to reduce noise.

- No notification - Pyplan does not send any emails, regardless of the test result.
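The three notification modes amount to a small decision rule. The sketch below is only an illustration of that rule: the `NotificationMode` enum and the `should_send_email` function are hypothetical names, not part of Pyplan's API.

```python
from enum import Enum


class NotificationMode(Enum):
    # Hypothetical names mirroring the three options in the dropdown.
    ALWAYS = "Always send emails"
    ON_FAILURE = "Send emails only on failure"
    NONE = "No notification"


def should_send_email(mode: NotificationMode, test_passed: bool) -> bool:
    """Return True when an email would be sent for this mode and result."""
    if mode is NotificationMode.ALWAYS:
        return True
    if mode is NotificationMode.ON_FAILURE:
        return not test_passed
    # NotificationMode.NONE: never send, regardless of the result.
    return False
```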

Users to notify

A field or control to select the email recipients for test notifications.

Interfaces

When checked, this test is linked to one or more specific interfaces.

After checking Interfaces, we have an Interfaces dropdown to choose which interfaces to include.

Main Menu Interfaces

Targets high-level interfaces that appear in the main menu.

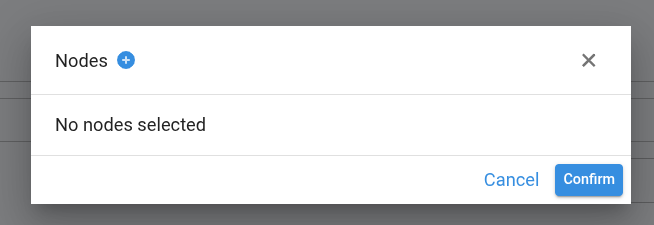

Nodes

When checked, the test is associated with specific model nodes.

Configuration:

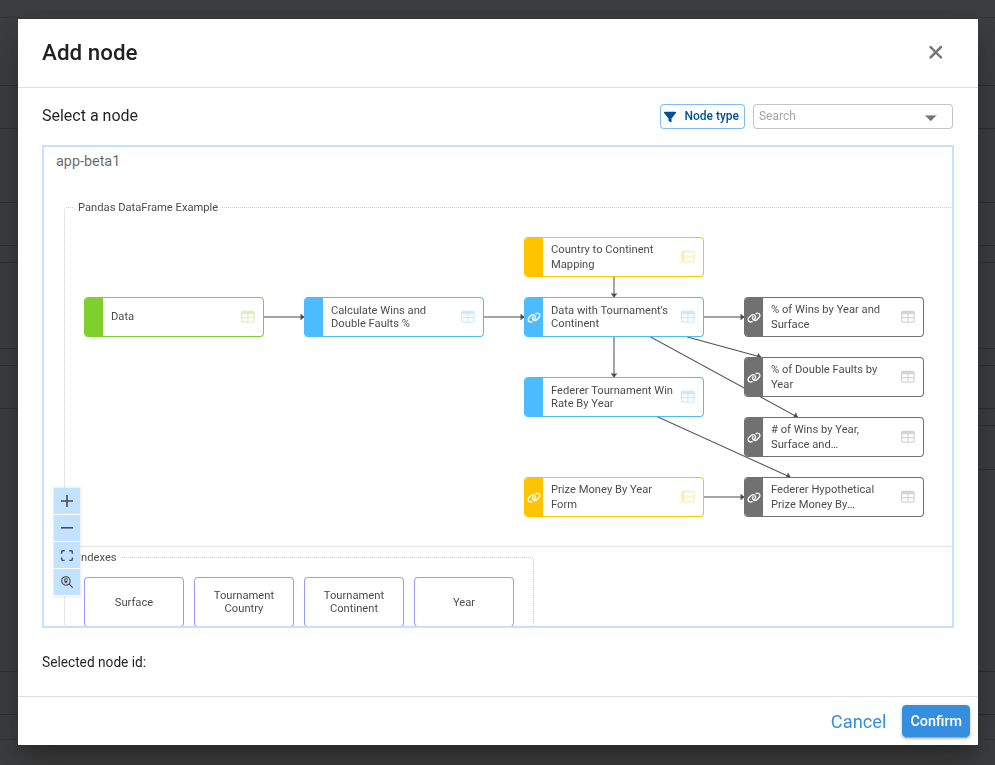

When we click the Configure nodes button, a dialog appears.

In the Nodes dialog, click the add icon next to the Nodes title.

This opens the Add node window.

In the Add node window:

- Navigate the influence diagram to locate the node we want to validate.

- We can zoom and move around the diagram as usual.

- Click once on the node to select it.

The node identifier appears at the bottom as Selected node id.

Click Confirm in the Add node window.

We return to the Nodes dialog, where the selected node now appears in the list.

At this point, the node is added to the test, and we can configure how it will be checked.

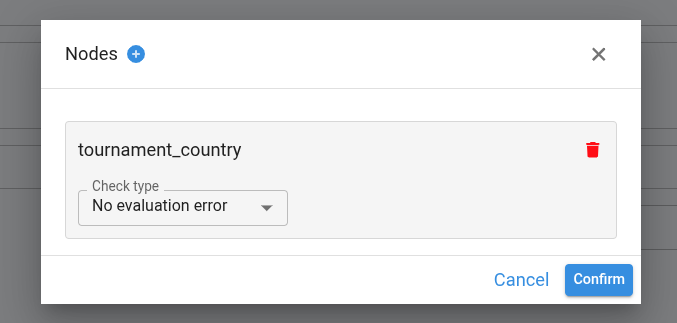

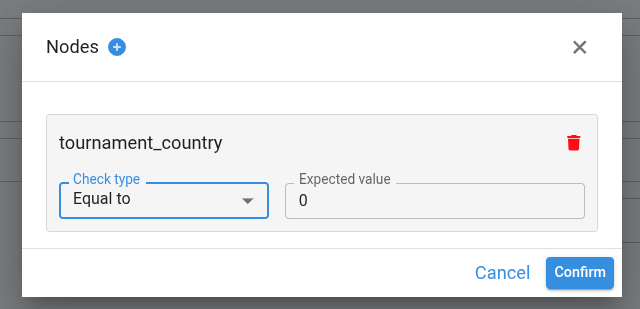

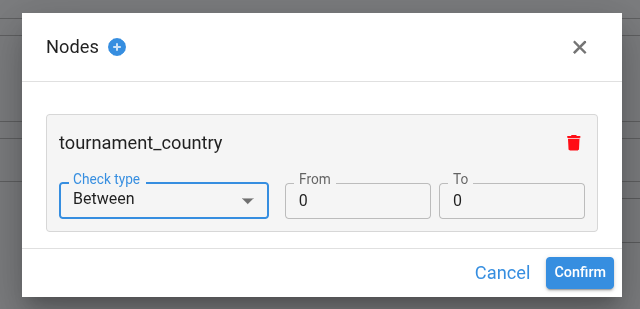

Available check types:

- No evaluation error - Verify that the node evaluates without errors.

- Equal to - Verify that the node’s result is equal to a specific expected value.

- Between - Verify that the node’s result stays within a defined numeric range.
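To make the meaning of each check type concrete, here is a minimal sketch in plain Python. It is not Pyplan's implementation: the function names are hypothetical, results are treated as simple scalar values, and the Between range is assumed to include both bounds.

```python
from typing import Any, Callable


def no_evaluation_error(evaluate: Callable[[], Any]) -> bool:
    """Pass when evaluating the node raises no exception."""
    try:
        evaluate()
    except Exception:
        return False
    return True


def equal_to(result: Any, expected: Any) -> bool:
    """Pass when the node's result equals the expected value."""
    return result == expected


def between(result: float, lower: float, upper: float) -> bool:
    """Pass when the result lies in the range (bounds assumed inclusive)."""
    return lower <= result <= upper
```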

After we finish configuring all node checks, click Confirm in the Nodes dialog.

Under the Nodes checkbox and the Configure nodes button, Pyplan displays one chip for each configured node.

Preconditions

Define the conditions that must be met before the test runs. They typically use selector nodes from the application.

Adding a precondition:

- In the Precondition area, open the Search dropdown.

- Select the selector node we want to constrain.

- In Selector values, specify the required value or list of values.

The information we enter is stored as part of the test configuration.

We use the Add precondition button only when we want to create another, separate precondition (a new selector node with its own values).

After we configure all desired options, click Create to save the automation test.

Once created, the test appears in the Automation Tests list.

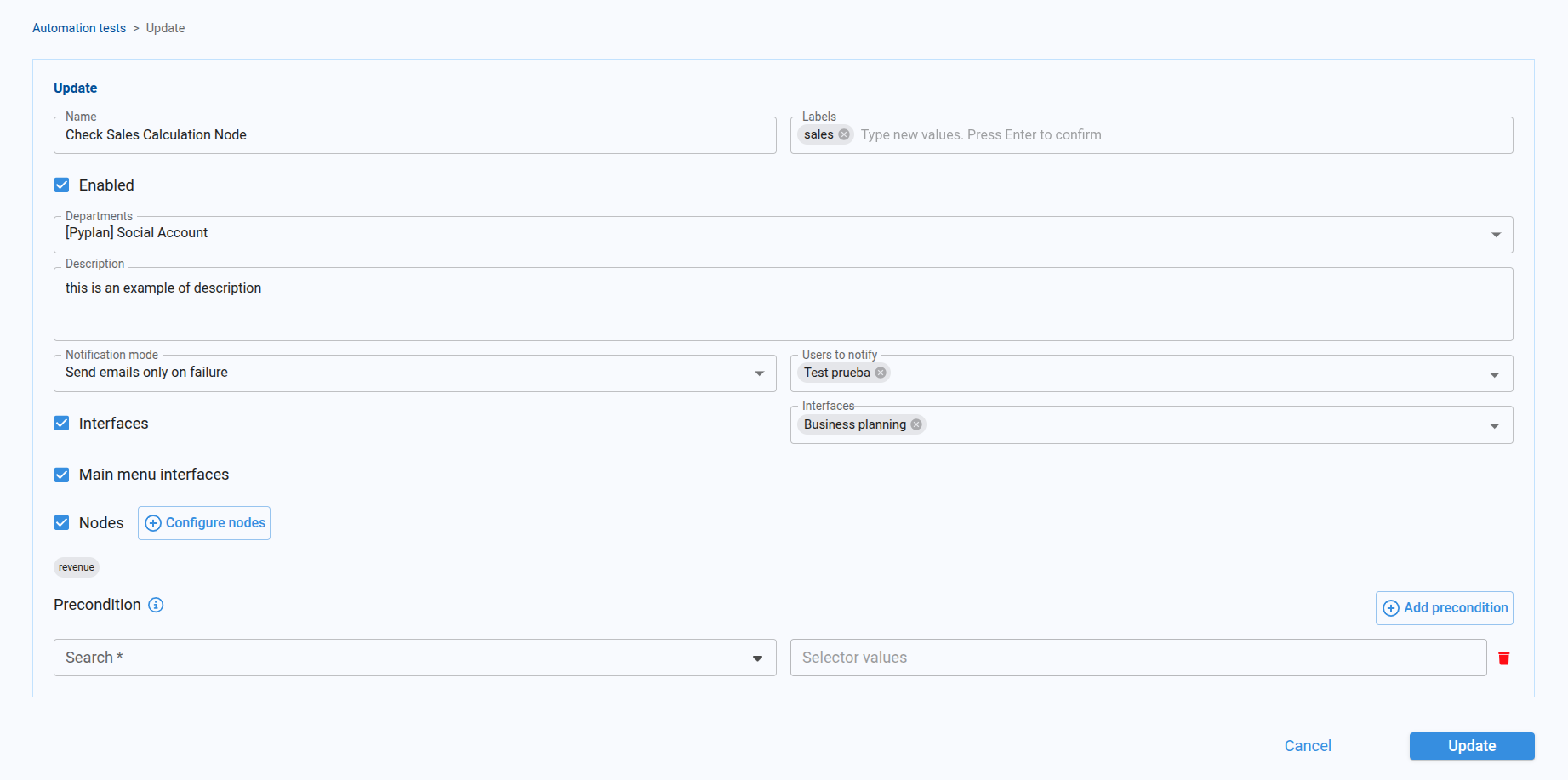

¶ Edit a test

The Edit test screen lets us modify an existing automation test using the same fields and options as the Create screen.

On the Update page we can:

- Change general information: Name, Labels, Departments, Description, and Enabled status.

- Adjust Notification mode and Users to notify.

- Add or remove Interfaces, Main menu interfaces, and Nodes (including updating node checks via Configure nodes).

- Review and modify Preconditions.

When we finish, we click Update to save the new configuration, or Cancel to discard any changes.

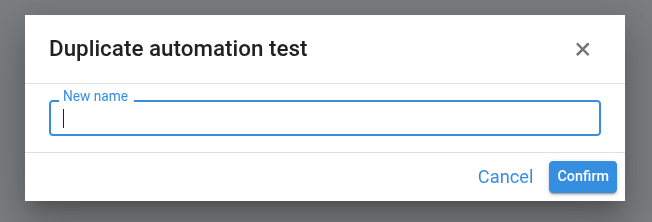

¶ Duplicate a test

The Duplicate function in the Automation Test Manager lets us quickly create a new test based on an existing configuration.

How It Works

- In the Automation Test Manager, select one test.

- Click Duplicate. A dialog titled Duplicate automation test opens.

- In the dialog:

  - Enter a New name for the duplicated test.

  - Click Confirm to create the duplicate, or Cancel to close the dialog without changes.

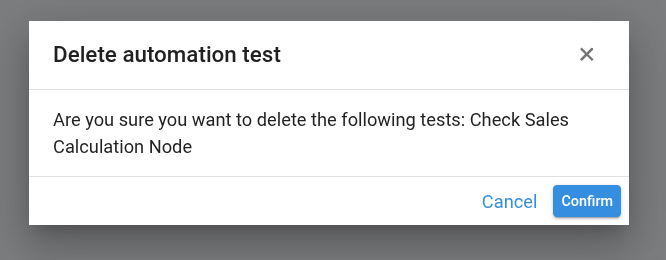

¶ Delete a test

The Delete function in the Automation Test Manager lets us remove one or more automation tests at once.

How It Works

- In the Automation Test Manager, select the tests we want to delete. We can select one or multiple tests.

- Once at least one test is selected, the Delete button in the toolbar becomes enabled.

- Click Delete and a confirmation dialog titled Delete automation test opens, listing the names of the tests that will be removed.

- In the dialog:

  - Click Confirm to permanently delete all selected tests.

  - Click Cancel to close the dialog without deleting anything.

After confirming, the selected tests are removed from the list.

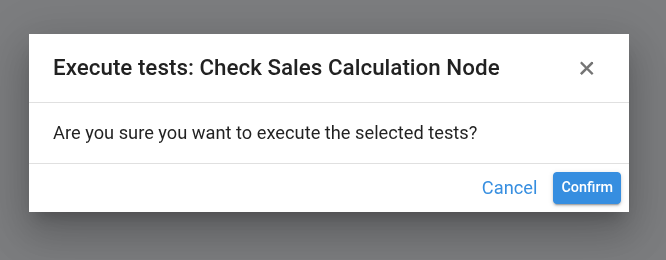

¶ Run a test

The Run action in the Automation Test Manager lets us trigger one or more automation tests on demand.

How It Works

- In the Automation Test Manager, select the tests we want to run. We can select one or multiple tests.

- Once at least one test is selected, the Run button in the toolbar becomes enabled.

- Click Run and a confirmation dialog appears.

In the dialog:

- Click Confirm to run all selected tests.

- Click Cancel to close the dialog without running anything.

When we confirm:

- The selected tests are not executed immediately.

- Instead, they are added to the application’s execution queue.

- Once executed, the test results can be reviewed later in the Logs view.
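The queued behaviour described above can be pictured with a toy model. This is a sketch only: the `ExecutionQueue` class is hypothetical, and first-in, first-out ordering is an assumption, since the order in which Pyplan processes queued tests is not stated here.

```python
from collections import deque


class ExecutionQueue:
    """Toy stand-in for the application's execution queue (hypothetical)."""

    def __init__(self):
        self._pending = deque()
        self.logs = {}  # test name -> result, reviewed later in the logs

    def enqueue(self, test_names):
        # Confirming the Run dialog only adds the tests to the queue.
        self._pending.extend(test_names)

    def process_next(self, run_test):
        # A worker takes the oldest queued test and records its result.
        name = self._pending.popleft()
        self.logs[name] = run_test(name)
        return name

    def pending(self):
        return list(self._pending)
```

Right after `enqueue`, `logs` is still empty; results only appear once `process_next` has handled each test.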

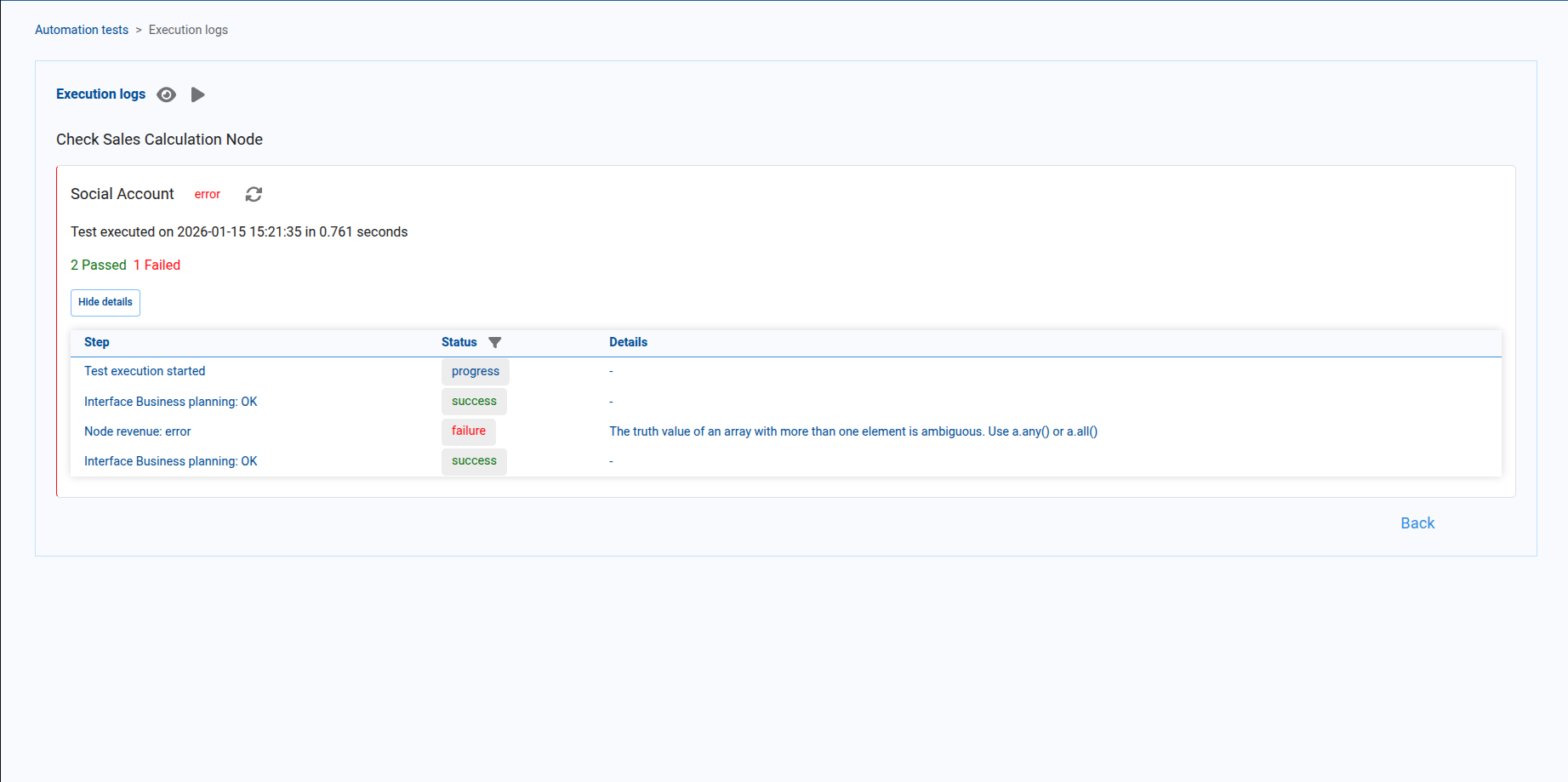

¶ Test Execution Logs

The Test execution logs view lets us inspect what happened during the last run of an automation test. This is where we diagnose failures and verify that each interface and node behaved as expected.

We access the logs from the Automation Test Manager:

- Locate the test in the table.

- Click the logs icon in the Actions column.

- We are redirected to the Execution logs page for that test.

Execution Logs Page Overview

In the Execution logs header we have two icon buttons: one opens the test configuration in edit mode, and the other executes the same test again (adding it to the execution queue).

At the top of the page we see the test name and below it one card per department in the test, each card showing:

- The department under which the test ran and the last status.

- Last execution time.

- A summary of passed and failed results.

- A refresh icon next to the department name, which manually refreshes the logs for that department.

- A View details / Hide details button, which shows or collapses the full log table.

In addition, the execution logs auto‑refresh every 10 seconds until the test finishes (either passed or failed).
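The auto-refresh behaviour is a plain polling loop. The sketch below shows the pattern under stated assumptions: `wait_until_finished` and `get_status` are hypothetical names, and the status strings `"passed"` and `"failed"` are illustrative values, not confirmed identifiers.

```python
import time


def wait_until_finished(get_status, interval_seconds=10, sleep=time.sleep):
    """Poll get_status() until it reports a finished state.

    get_status: hypothetical callable returning the current status string.
    sleep: injectable so the loop can be exercised without real waiting.
    """
    while True:
        status = get_status()
        if status in ("passed", "failed"):
            return status
        sleep(interval_seconds)
```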

Log Table

The main part of the page is a table that lists each step of the test execution.

Columns:

Step - Describes what is being checked.

Status - The outcome of the step

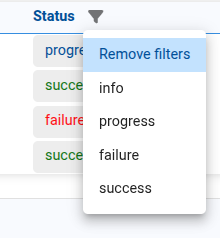

In the Execution logs table we can quickly focus on specific types of steps using the filter in the Status column.

Next to the Status header there is a filter icon.

When we click it, a menu opens with the available statuses.

We choose one of these options to show only the steps with that status.

This is especially useful when we want to review only the failed steps for example.

The table will now display only the steps where the test failed, making it easier to identify and analyze issues.

To return to the full list of steps, we open the same menu and choose Remove filters.

Details - Additional information about the step.For failures, it typically shows the error message or a short explanation.
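The Status filter behaves like a simple selection over the log rows. The sketch below assumes each row is a dictionary with the three columns of the table; the row contents, the status strings, and the `filter_steps` name are all hypothetical.

```python
def filter_steps(steps, status=None):
    """Return the steps with the given status, or all steps if status is None."""
    if status is None:  # equivalent to choosing Remove filters
        return list(steps)
    return [step for step in steps if step["status"] == status]


# Hypothetical log rows using the three columns of the table.
steps = [
    {"step": "Open interface", "status": "passed", "details": ""},
    {"step": "Evaluate node", "status": "failed", "details": "example error message"},
]
failed_only = filter_steps(steps, status="failed")
```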

¶ pp.execute_tests function

The pp.execute_tests function lets us programmatically run one or more automation tests directly from a Pyplan node.

Base signature:

pp.execute_tests(test_ids=None, detached_run=False)

Parameters

- test_ids: List of test IDs to execute. If not provided, all tests are executed.

- detached_run: If True, the tests are executed in detached mode. This can be useful for a periodic task that runs tests. Otherwise, tests are run using the current instance.

Returns

A dictionary with test IDs as keys and their execution results as values.

Example

pp.execute_tests(test_ids=['test1', 'test2', 'test3'])
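Because the function returns a dictionary keyed by test ID, the results can be post-processed in the same node. The helper below is a sketch: `failed_test_ids` and `is_passed` are hypothetical names, and since the structure of each result value is not specified here, the pass/fail decision is left to a predicate supplied by the caller.

```python
def failed_test_ids(results, is_passed):
    """Return the IDs whose result does not satisfy is_passed.

    results: dict mapping test ID -> execution result, as returned by
             pp.execute_tests.
    is_passed: caller-supplied predicate; the shape of each result value
               is an unknown here, so it is not inspected directly.
    """
    return [test_id for test_id, result in results.items() if not is_passed(result)]


# Inside a Pyplan node, usage might look like this (not run here):
# results = pp.execute_tests(test_ids=['test1', 'test2', 'test3'])
# failures = failed_test_ids(results, is_passed=my_predicate)
#
# Running every test in detached mode, e.g. from a periodic task:
# pp.execute_tests(detached_run=True)
```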