Pyplan is a platform designed so that users without programming skills can build and share Data Analytics and Planning applications.

Building an application always starts with data input, which can be done manually or by reading from an external data source.

¶ Manual Data Input

We create manual inputs by dragging an Input data node onto the influence diagram.

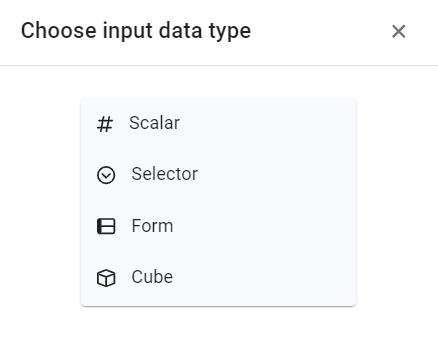

After we give the node a title, Pyplan opens a wizard where we choose and configure the type of manual input we want to use.

¶ Scalar Input

A Scalar Input is used to enter a single parameter (for example, a discount rate, an exchange rate, or a growth assumption).

Once we define the node title, the Scalar Input appears in the diagram as a simple node representing that value.

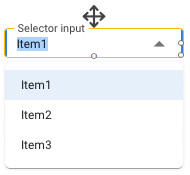

¶ Selector Input

A Selector Input is used to define a list of alternatives that will be shown in a drop‑down selector (for example, scenario, region, product line).

After creation, the node appears in the diagram and provides a selectable list that can be used by other nodes in the model.

¶ Form Input

The Form Input is the most powerful and flexible manual data‑entry option. It lets us:

- Combine input columns (where users type values) with

- Calculated columns that act as references or guides for the data being entered.

For example, in a sales budgeting tool, we can show last year’s sales as a reference column next to the cells where users enter the new budget.

Form data is stored in a database, which enables multiple users to enter or update data simultaneously.

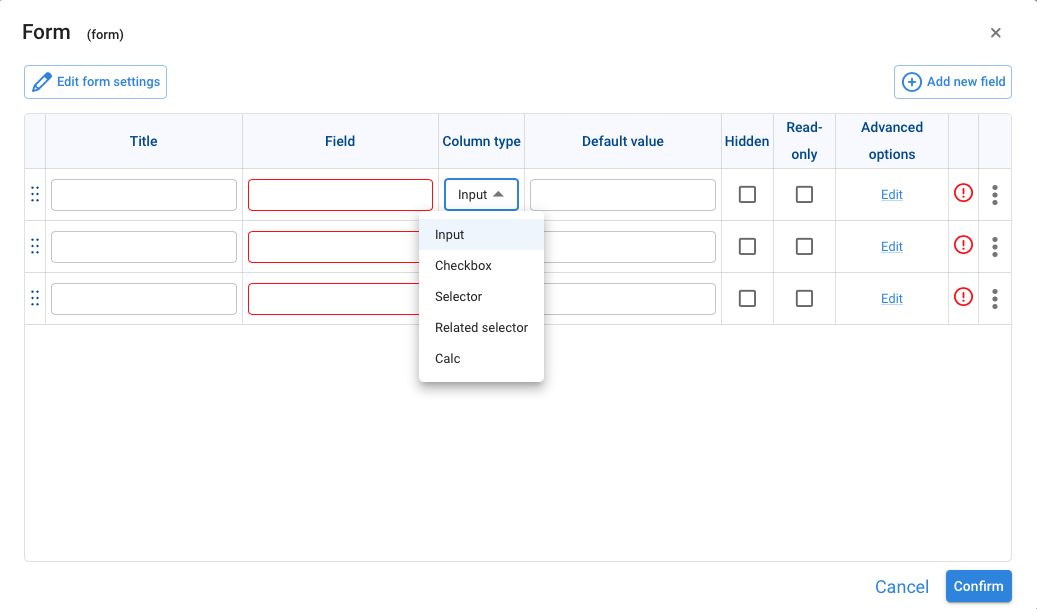

When we drag an Input data node and choose Form as the input type, Pyplan opens a configuration wizard. In this wizard we:

- Define a title for each input field. Pyplan automatically suggests a field name based on the title.

- Choose the column type from the options in the drop‑down list (for example, number, text, date) and complete the configuration of the form.

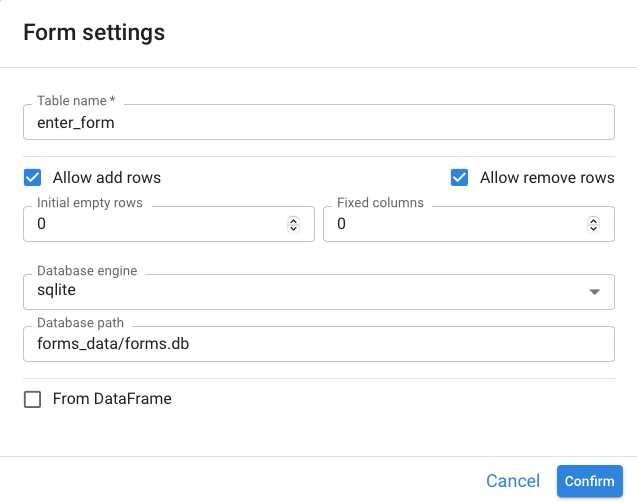

When we create a Form Input node, Pyplan opens the Form settings window. Here we configure how the form will behave and how its data will be stored.

Table name*

Name of the table where the form data will be stored in the database.

- It must be unique within the database.

- By default, Pyplan usually proposes a name based on the node Id.

Allow add rows

When enabled, users can add new rows in the form (for example, new products, customers, or records).

Allow remove rows

When enabled, users can delete existing rows from the form.

Initial empty rows

Number of blank rows that will be created when the form is first opened.

- Useful when we want to present a pre‑defined number of rows ready to be filled.

Fixed columns

Number of columns that will be considered fixed.

- Fixed columns cannot be removed by end users and typically hold key information such as IDs, codes, or descriptions.

Database engine

Storage engine used for the form data. In the image it is set to sqlite.

- Other engines may be available depending on the configuration (for example, PostgreSQL or a custom engine).

Database path

Physical path or connection string to the database where the form table will be stored.

- For SQLite this is usually the path to a .db file (for example, forms_data/forms.db).

From DataFrame

When this option is checked, the form can be initialized based on the structure of an existing DataFrame (columns and, optionally, data).

- This is useful when we already have a DataFrame in another node and want to turn it into an editable form.

After configuring these options, we click Confirm to create the form with the specified behavior and storage settings.
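As a rough illustration of what storing form rows in SQLite looks like, the sketch below creates a small table and reads it back with pandas. The table name sales_budget_form, its columns, and the in-memory database are assumptions made for the example; they are not the schema Pyplan creates.

```python
import sqlite3
import pandas as pd

# Hypothetical form table; Pyplan's real schema may differ.
conn = sqlite3.connect(":memory:")  # a real form would use the Database path
conn.execute(
    "CREATE TABLE sales_budget_form (product TEXT, last_year REAL, budget REAL)"
)
conn.executemany(
    "INSERT INTO sales_budget_form VALUES (?, ?, ?)",
    [("A", 100.0, 110.0), ("B", 80.0, None)],  # None: budget not entered yet
)
conn.commit()

# Read the stored rows back as a DataFrame
form_df = pd.read_sql_query("SELECT * FROM sales_budget_form", conn)
conn.close()
```

Because the rows live in a database table rather than in the node Definition, several users can write to the same table, which is the behavior the form settings describe.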

¶ Cube input

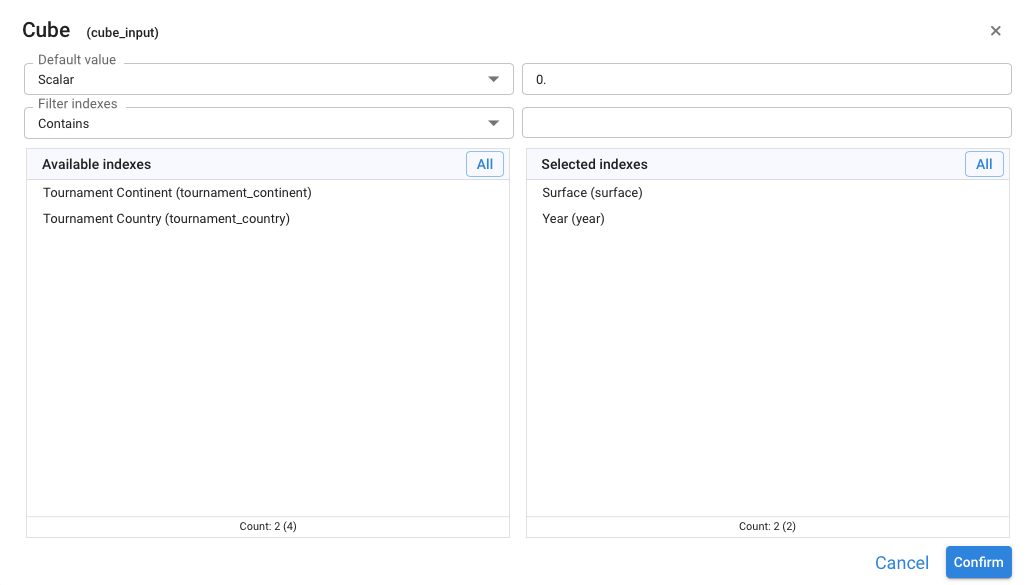

A Cube input node lets us enter a single value for every combination of a set of dimensions (indexes). When we create or edit a Cube input, Pyplan opens the Cube settings dialog shown in the image.

The dialog has four main parts:

Default value (top‑left)

- Defines the value that will be used initially for all cells in the cube.

- The first drop‑down lets us choose the type of default value, typically:

  - Scalar – a literal value we type in the box on the right (for example 0, 1.0, 'N/A').

  - App node – use the result of another node as the default (not shown in the screenshot but available as an option).

Filter indexes (top‑middle)

- Optional filter to help us search indexes when there are many.

- We choose a criterion (for example, Contains) and then type text to filter the list of indexes by name.

Available indexes (bottom‑left)

- Lists all Index nodes in the application that can be used as dimensions of the cube.

- Each item shows the index title and its identifier in parentheses, for example:

  - Tournament Continent (tournament_continent)

  - Tournament Country (tournament_country)

- We move indexes from here to the cube by selecting them and using the usual selection controls (double‑click, buttons, or keyboard shortcuts).

Selected indexes (bottom‑right)

- Shows the indexes that will define the dimensions of this Cube input.

- These dimensions determine the axes of the input table where users will enter values.

Once we have chosen:

- The default value (type and value), and

- The set of selected indexes that define the cube dimensions,

we click Confirm to create or update the Cube input. The resulting node will allow users to enter one value for each combination of the selected dimensions (for example, one value per Surface × Year pair).
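A Cube input can be pictured as an xarray.DataArray that starts out filled with the default value. The sketch below is only an illustration of that idea: the index contents and variable names are hypothetical, and the code Pyplan actually generates for a Cube input may look different.

```python
import numpy as np
import pandas as pd
import xarray as xr

# Hypothetical indexes standing in for Index nodes
surface = pd.Index(["Clay", "Grass", "Hard"], name="surface")
year = pd.Index([2021, 2022], name="year")

default_value = 0.0  # the scalar default chosen in the dialog

# One cell per (surface, year) combination, all set to the default
cube = xr.DataArray(
    np.full((len(surface), len(year)), default_value),
    coords={"surface": surface, "year": year},
    dims=["surface", "year"],
)

# A user edit corresponds to overwriting a single cell
cube.loc[{"surface": "Clay", "year": 2022}] = 12.0
```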

¶ Data source reading

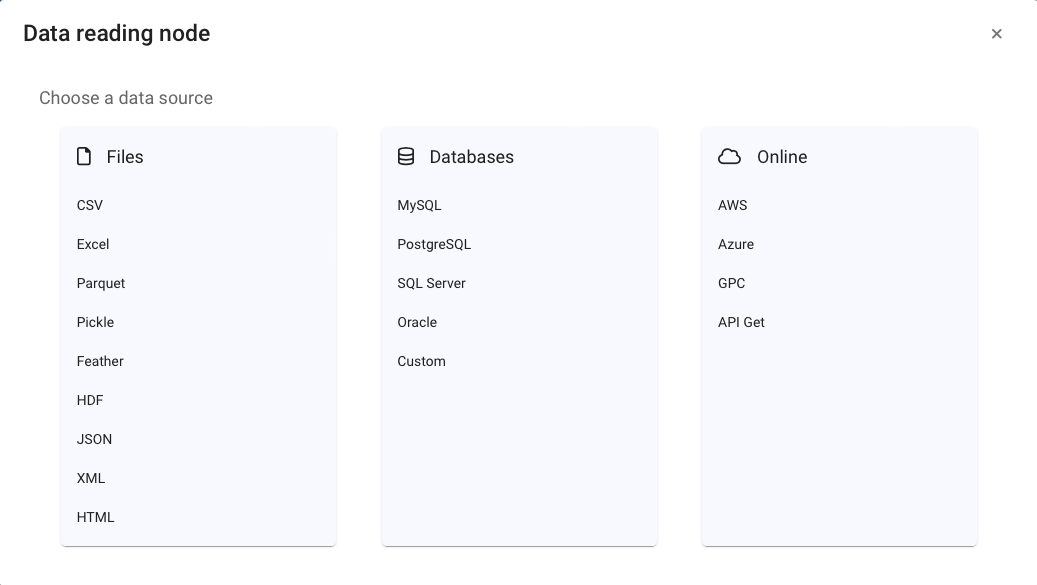

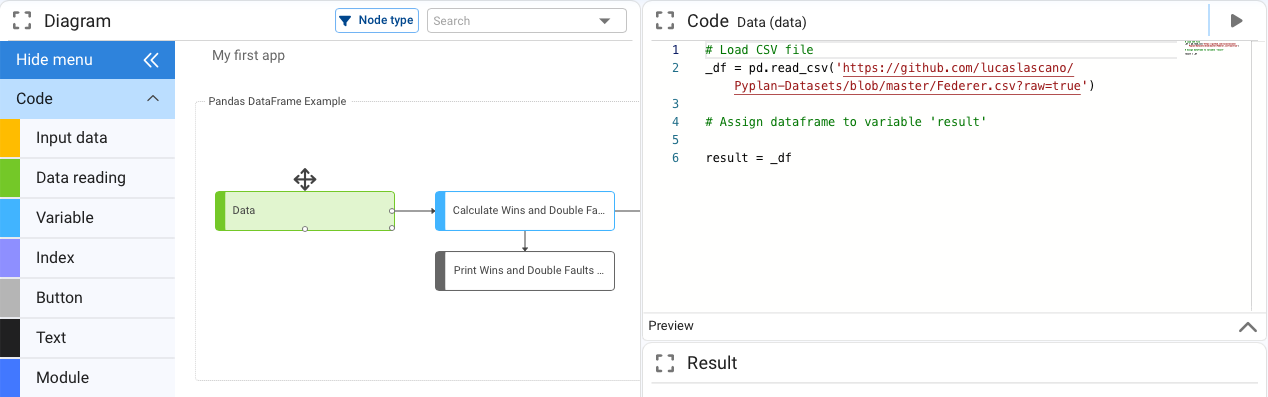

Another way to load data into Pyplan is by connecting to external data sources, which are read when the node’s code is executed.

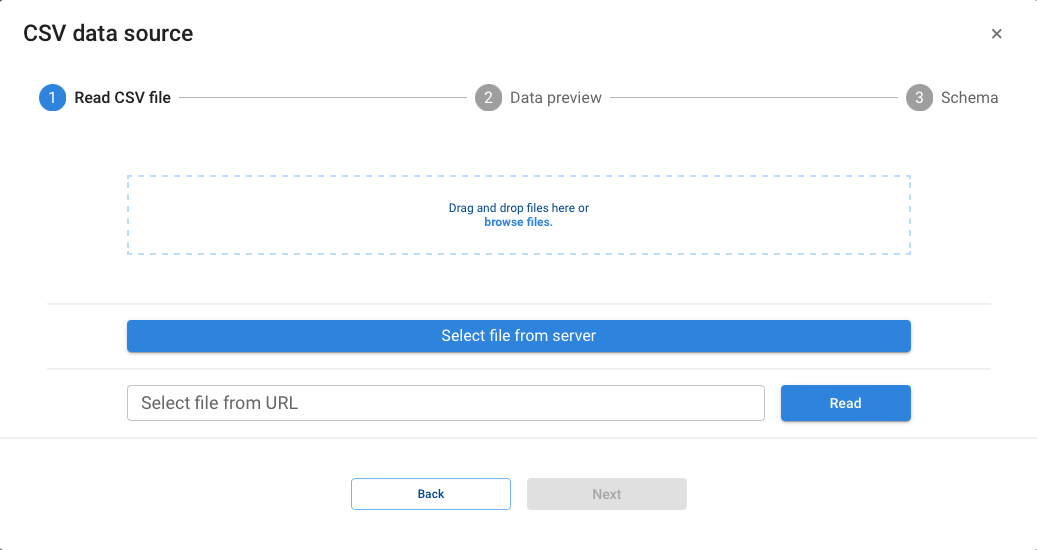

To do this, we drag a Data reading node onto the diagram. After we define its title, Pyplan opens a dialog where we choose the source type and configure the connection.

The most common options (such as CSV and Excel) have dedicated wizards that guide us through all the reading parameters (file path, separator, headers, sheet name, etc.).

Less frequently used options are initialized with base code. We then complete the required parameters in that code to perform the corresponding data read.
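For a CSV source, the reading parameters mentioned above map naturally onto pandas.read_csv. The following is a minimal sketch, not the code Pyplan generates: the inline text replaces a real file so that the example is self-contained, and the column names are invented.

```python
import io
import pandas as pd

# Inline text stands in for a real file so the sketch runs anywhere
csv_text = "year;region;sales\n2023;North;120.5\n2023;South;98.0\n"

df = pd.read_csv(
    io.StringIO(csv_text),  # in practice, a file path
    sep=";",                # column separator
    header=0,               # first row holds the column names
)

# In a node Definition, the value to return is assigned to result
result = df
```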

¶ Data Manipulation and Operations

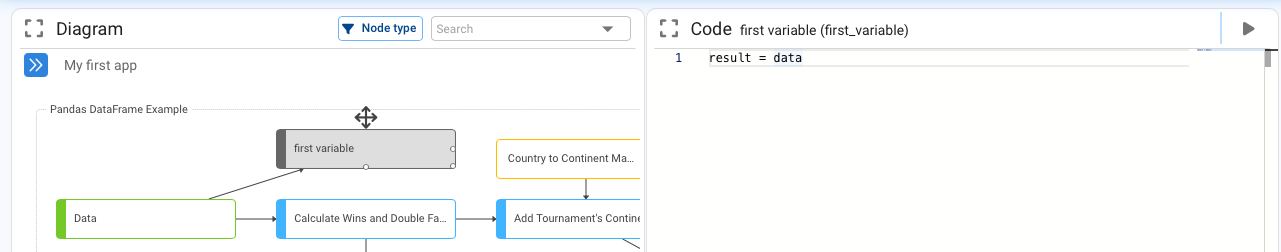

Once we have created our input nodes (manual or from data sources), the next step is to analyze and process that data. For this we use Variable nodes.

A Variable node is the most general node type: its Definition can contain any Python code. When we drag a Variable node onto the diagram and give it a title, it starts with a simple default definition:

result = 0

To connect this new Variable node to another node that will act as its data source, we have two options:

- Type the node Id directly in the Definition (coding window).

- Place the cursor where we want to insert the reference and, while holding Alt, click the source node in the diagram. Pyplan automatically inserts that node’s Id into the Definition.

After we confirm the changes:

- An arrow appears between the nodes, showing the dependency.

- If the Variable node has no further outputs, its color changes to gray, indicating that it behaves as a report or terminal node (no other nodes depend on it).

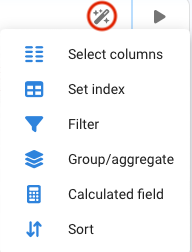

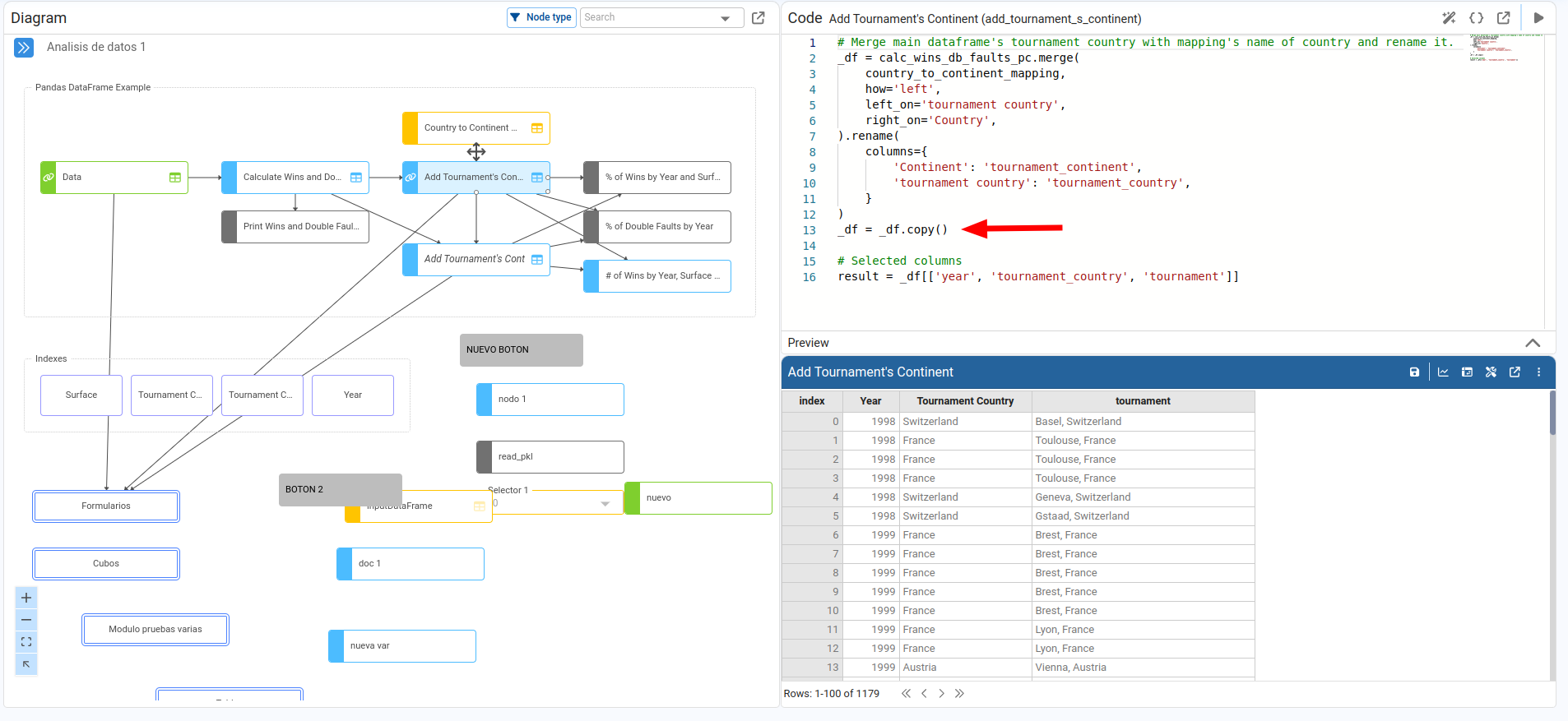

Although we can write any Python code directly in a Variable node, Pyplan also provides a set of Wizards to help us perform common data‑transformation tasks through guided interfaces.

- The available wizards depend on the data structure returned by the node (for example, DataFrame, DataArray, etc.).

- Because of this, we must evaluate the node first so that Pyplan can detect the result type and determine which wizards apply.

Once the node has been executed, we can open the wizards by clicking the Wizards icon in the node tools bar.

When we use a wizard, Pyplan automatically updates the node Definition with the appropriate Python instructions to perform the selected operation. This works similarly to recording macros in a spreadsheet: it lets users who do not yet know Python learn its functions and syntax while still being able to build complex transformations.

¶ Indexes

Indexes (or dimensions) define how data is structured. They act as the row and column headers of a table and describe what each value at their intersection represents. Typical examples of indexes are lists of products, regions, or time periods. Indexes characterize the facts we work with and are used throughout the model to organize and aggregate data.

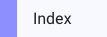

In Pyplan, we create indexes by dragging an Index node onto the influence diagram.

After we assign a title to the Index node, Pyplan opens a wizard to define its elements.

¶ List

The List option lets us enter index elements manually.

- We can type each element directly in the wizard, or

- Copy a list from an external table (for example, Excel) and paste it, specifying the position of the first element.

- If the pasted list is longer than the visible range, the index range is automatically extended to include all elements.

¶ Range

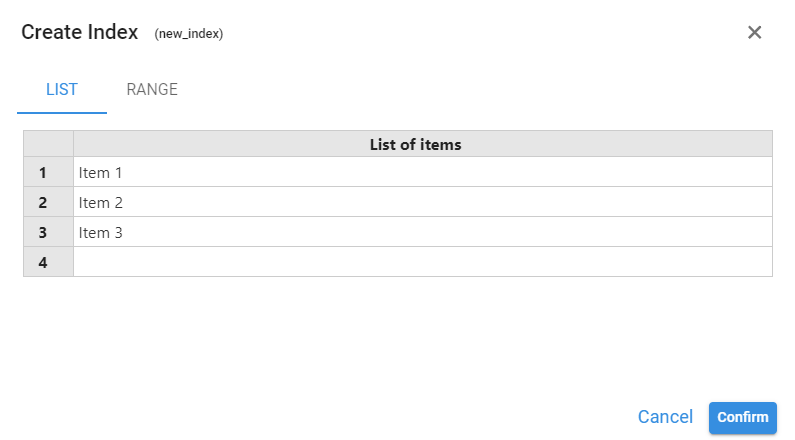

The Range option generates the index automatically by defining the parameters of a range. The range can be of type:

- Text

- Number

- Date

For example, we can create an index of years from 2020 to 2030, or months from January to December, without entering each element manually.
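In plain pandas terms, the three range types could be generated as shown below. This is a sketch under the assumption that an index can be represented as a pandas.Index; the names and the "Product N" pattern are hypothetical.

```python
import pandas as pd

# Number range: years 2020 to 2030 inclusive
years = pd.Index(range(2020, 2031), name="year")

# Date range: first day of each month in 2024
months = pd.date_range(start="2024-01-01", periods=12, freq="MS", name="month")

# Text range: a prefix followed by a running number
products = pd.Index([f"Product {i}" for i in range(1, 6)], name="product")
```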

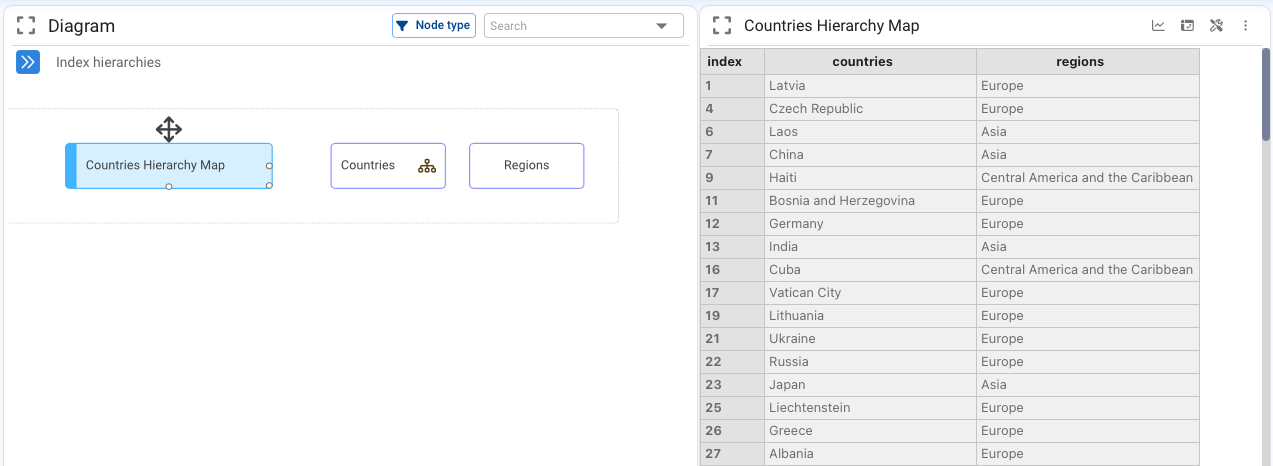

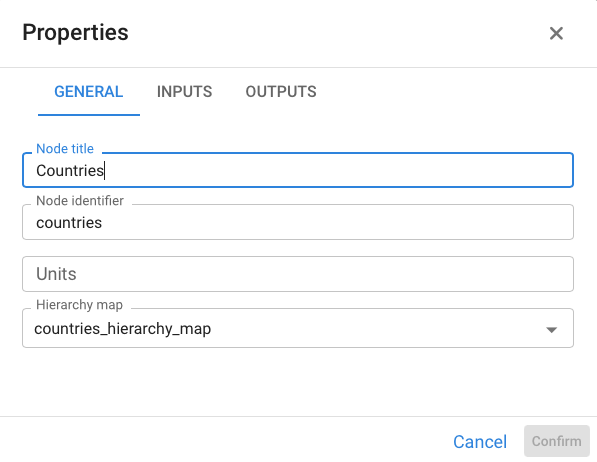

¶ Hierarchy of indexes

Indexes can also have hierarchies, that is, higher levels of aggregation. For example:

- A Country index may roll up into Region or Continent.

- A Month index may roll up into Quarter, Semester, or Year.

The relationship between a base index and its upper hierarchy is defined through a mapping table: for each element of the lower‑level index we indicate the corresponding element of the higher‑level index.

For instance, a table can link each country in a Countries index to its region in a Regions index.
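The mapping idea can be sketched with pandas: a Series plays the role of the mapping table, and grouping by it rolls country values up to regions. The countries, regions, and figures here are invented for illustration, and this is not how Pyplan stores the hierarchy internally.

```python
import pandas as pd

# Hypothetical mapping table: one region per country
country_to_region = pd.Series(
    {"Argentina": "Americas", "Brazil": "Americas", "Spain": "Europe"},
    name="region",
)

# Values at the lower level (country)
sales = pd.Series({"Argentina": 10, "Brazil": 30, "Spain": 25}, name="sales")

# Roll up: group the country values by their mapped region and sum
sales_by_region = sales.groupby(country_to_region).sum()
```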

By right‑clicking the lower‑level index node and opening its Properties, we can see which table stores this correspondence with the upper hierarchy.

Any index that has a hierarchical relationship is marked with a special icon inside the node, as shown for the Countries node in the example. This visual cue helps us quickly identify which indexes participate in hierarchies within the model.

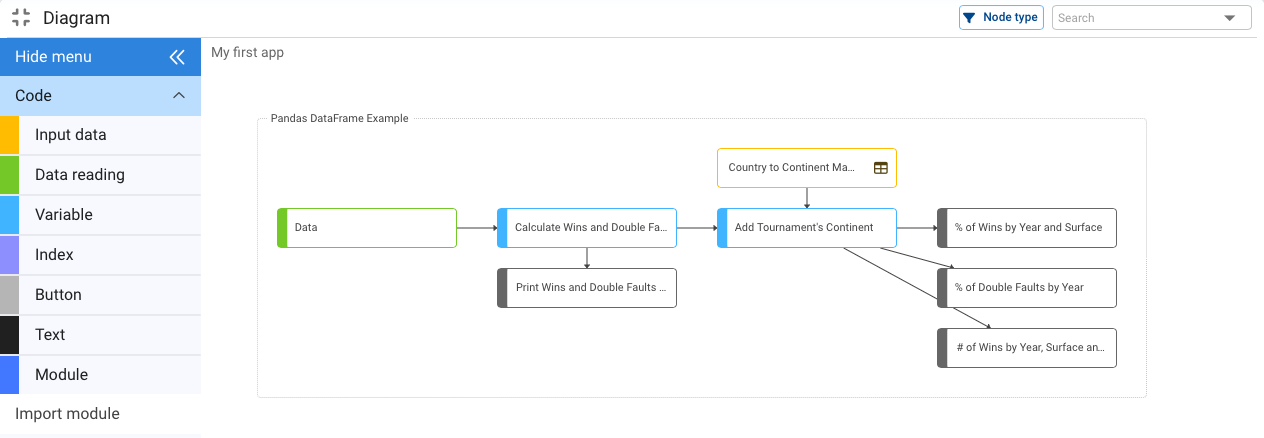

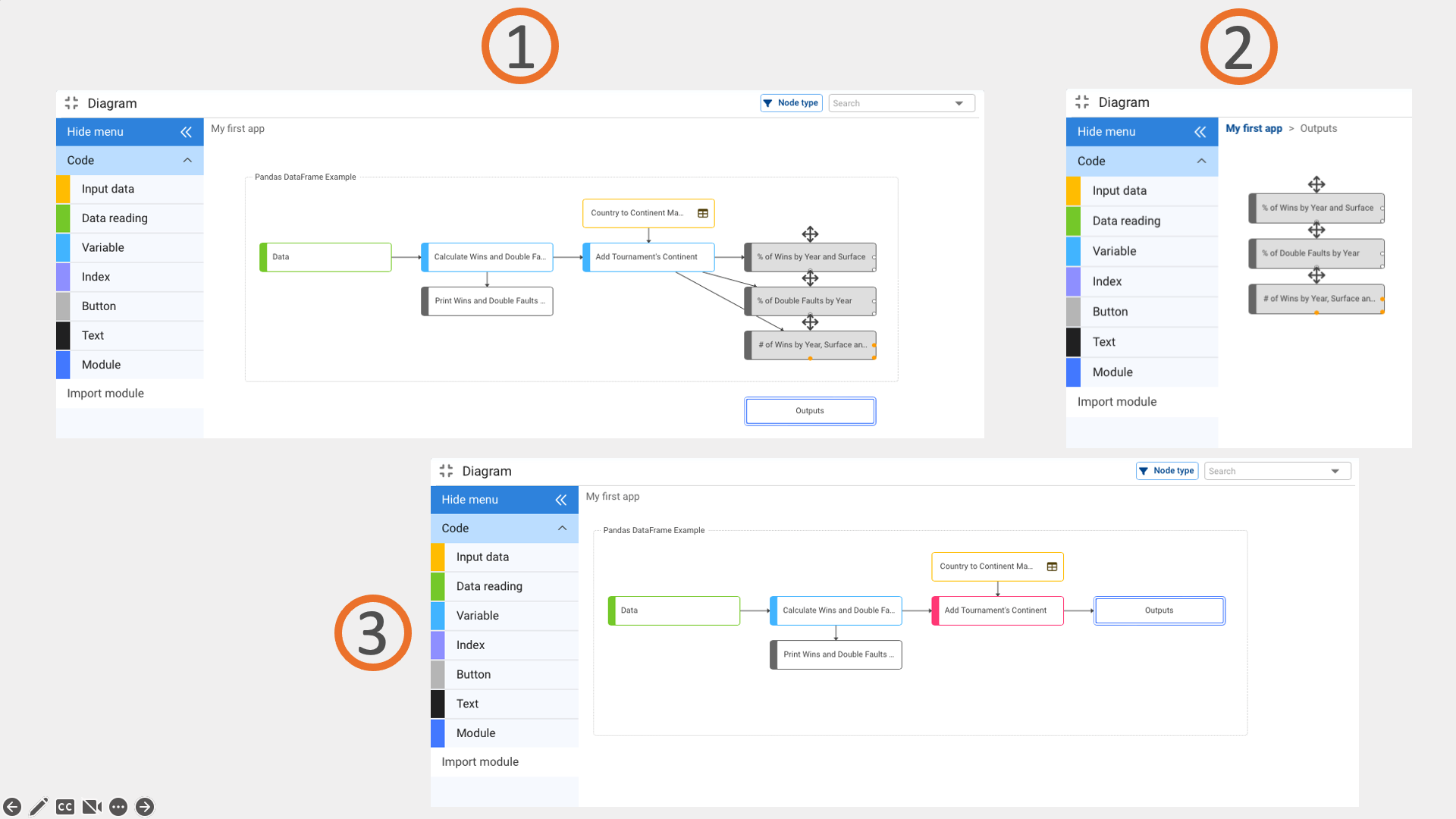

¶ Organization of the diagram

The diagram, or workflow, is how code is organized in Pyplan. A good convention for readability is to keep the flow of arrows (information flow) going from left to right and from top to bottom.

Besides using clear node titles to summarize each step, we can also place text nodes to group or explain sections of the diagram and make the logic easier to understand.

As a general rule, it is preferable not to exceed 20 nodes in a single diagram. When a diagram starts to grow beyond that, we recommend using Modules to group nodes that share a common purpose.

For example, if we have several output nodes, we can:

- Create a module named “Outputs”.

- Cut the existing output nodes.

- Paste them inside the new module.

This keeps the main diagram clean and lets us navigate to detailed sections only when needed.

¶ Node coloration

Nodes are automatically colored to help us quickly understand their role:

- Most node types keep the same color they have in the node palette from which we drag them.

- Variable nodes are the only exception: their color changes according to their function in the diagram:

- Light blue – the node is part of an ongoing calculation process and has outputs in the same module.

- Gray – the node has no outputs (it behaves like a report or terminal result).

- Red – the node has outputs outside the module that contains it, indicating cross‑module dependencies.

These colors provide a quick visual cue about where calculations start, propagate, and end.

¶ Node execution

Each node can be in one of two states: Not calculated or Calculated.

When we open an application, all nodes are initially Not calculated. No calculations are performed until we explicitly run a node (for example, by double‑clicking it, pressing the Play button, or using Ctrl+Enter).

When we execute a node, Pyplan:

- Recursively walks the influence diagram upstream, checking whether all input nodes needed by the target node are already calculated.

- If any input node is not calculated, Pyplan goes one step further back and repeats the check.

- Once it reaches either the application boundary or a chain of nodes that are already calculated, it evaluates the required nodes downstream, in the correct order, until the requested node is calculated.

This mechanism ensures that:

- The result of a node is always consistent, and does not depend on the manual order in which we previously executed other nodes.

- Only the necessary nodes are recalculated when an intermediate variable changes. Any node whose value is unaffected by the change is not re‑executed, which greatly improves performance.
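The evaluation strategy described above resembles lazy evaluation with cached results. The sketch below is a conceptual model only, not Pyplan's engine: the node names, the nodes dictionary, and the evaluate and invalidate helpers are all hypothetical.

```python
# Each hypothetical node lists its inputs and a function of their results
nodes = {
    "price": {"inputs": [], "func": lambda: 10},
    "units": {"inputs": [], "func": lambda: 3},
    "revenue": {"inputs": ["price", "units"], "func": lambda p, u: p * u},
    "report": {"inputs": ["revenue"], "func": lambda r: f"Revenue: {r}"},
}

cache = {}      # results of nodes in the Calculated state
run_order = []  # records the order in which nodes are evaluated


def evaluate(node_id):
    """Return a node's result, calculating its inputs first if needed."""
    if node_id in cache:  # already Calculated: reuse the result
        return cache[node_id]
    node = nodes[node_id]
    args = [evaluate(i) for i in node["inputs"]]  # walk upstream
    cache[node_id] = node["func"](*args)          # then evaluate this node
    run_order.append(node_id)
    return cache[node_id]


def invalidate(node_id):
    """Mark a node and everything downstream of it as Not calculated."""
    cache.pop(node_id, None)
    for other, spec in nodes.items():
        if node_id in spec["inputs"]:
            invalidate(other)


first = evaluate("report")
nodes["units"]["func"] = lambda: 5  # an intermediate input changes
invalidate("units")
run_order.clear()
second = evaluate("report")         # price is not re-executed
```

After the change, only units, revenue, and report are evaluated again; price keeps its cached result because nothing upstream of it changed.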

We can inspect the Calculated / Not calculated status by selecting a node:

- In the Result view, Pyplan shows the node output if it is calculated.

- If not, it displays a message indicating that the node has not been calculated yet.

¶ Data structures supported

Pyplan natively works with a set of standard data structures such as tables and cubes, based on widely used Python libraries (Pandas, NumPy, xarray, etc.).

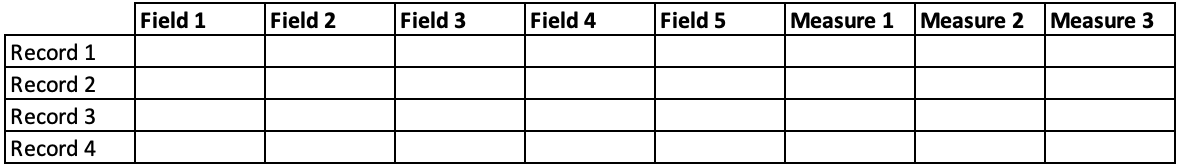

- Data tables follow the typical structure of a relational database table: columns represent attributes or measures, and each row corresponds to a record.

- Data cubes can have any number of dimensions. These dimensions may be named (labeled axes) or unnamed.

Most commonly used data structures

¶ Data tables

A table is similar to a database table: it is a data structure in which:

- each column represents an attribute or measure, and

- each row represents a specific record of those attributes or measures.

In Pyplan, data tables correspond to the DataFrame object from the Pandas library, one of the most widely used tools in Data Science.

- Pyplan provides wizards that implement common operations on DataFrames (sorting, filtering, aggregation, joins, etc.).

- Any additional transformation can be implemented directly in Python using the full Pandas API in the node Definition.

Pandas Quick Introduction

A quick introductory guide to Pandas functionalities can be found here.

¶ Assisted operations with Tables

When a node returns a table (a DataFrame) as its result, Pyplan automatically enables a set of wizards for that node.

For example, if we create a variable node called first_variable and set its Definition to:

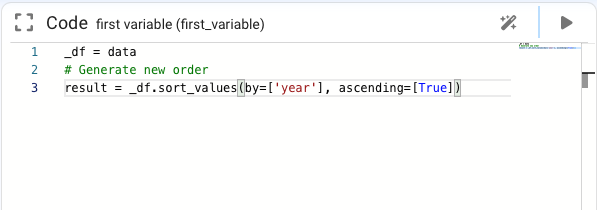

result = datathen run the node so that Pyplan can identify the result type, we can open the wizards and choose, for instance, Sort by Year.

Pyplan will:

- apply the requested operation to the DataFrame,

- update the node result accordingly, and

- modify the node Definition to include the corresponding Pandas code.

We can keep interacting with the table using wizards or by editing the generated code. By observing how each action changes the Definition, we progressively learn how to use Pandas directly in Python.

¶ Data cubes

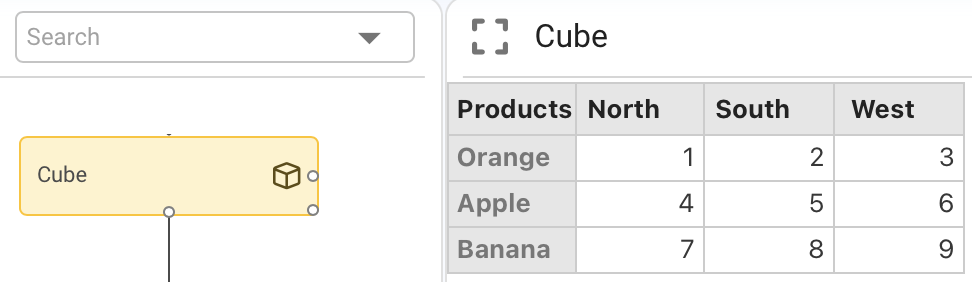

A data cube is also natively supported in Pyplan. The underlying object is DataArray from the xarray library.

A named data cube is a data structure that stores values indexed by n named dimensions. In Pyplan, these dimensions are called Indexes and are represented by Index nodes in the diagram.

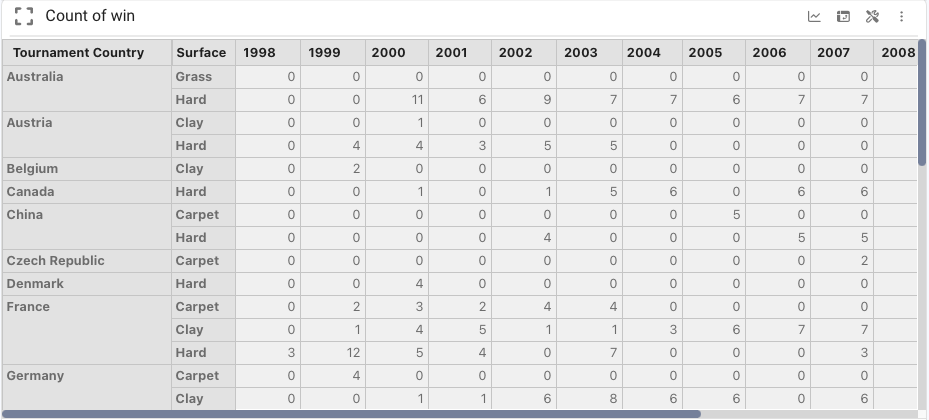

For example, we might define a data cube “Count of Win” indexed by the dimensions:

- Tournament Country

- Surface

- Year

In this case, each cell in the cube contains the count for a specific combination of [Tournament Country, Surface, Year].

Data cubes in Pyplan can be created in several ways:

- By transforming tables (DataFrame objects) into cubes.

- By direct manual inputs (for example, using Input Cube or Input Table nodes).

- By performing operations between existing cubes (arithmetic operations, aggregations, reindexing, etc.).

These capabilities make tables and cubes the core data structures for modeling and analytics within Pyplan.

¶ Creating a Cube from a Data Table

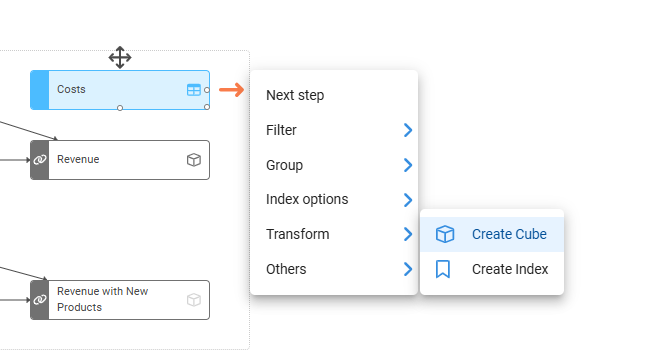

We can create a data cube directly from any node whose result is a data table (pandas.DataFrame).

- Click the arrow to the right of the node that returns the table.

- In the context menu, choose Transform → Create cube.

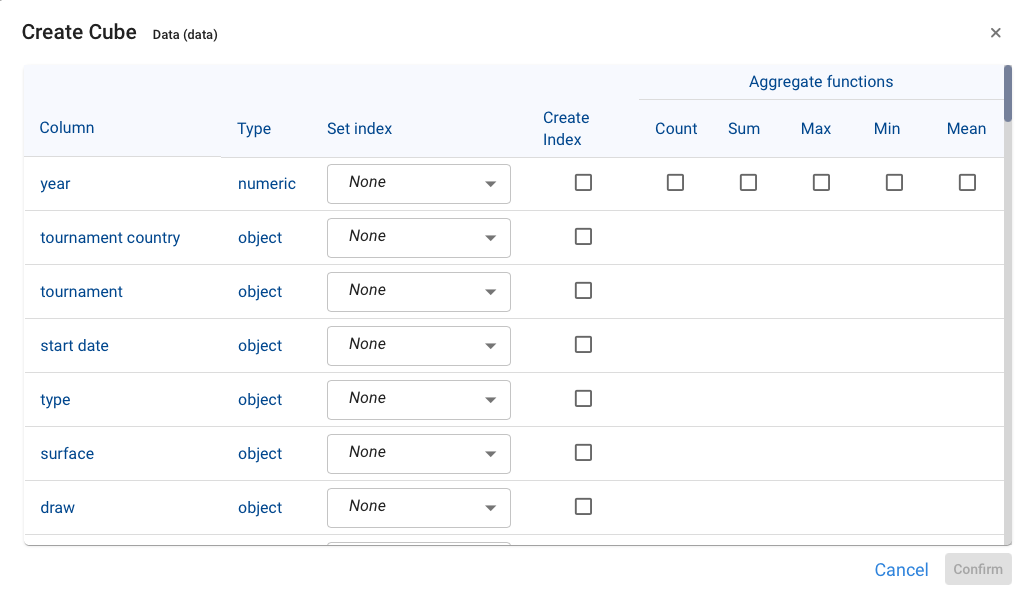

Pyplan then opens a dialog that shows, for each column of the DataFrame:

- The column name.

- The data type.

- The Index that will be used for that column (if one already exists).

- An option to create a new Index from that column when none exists.

- The aggregation function that will be applied to obtain the measure (fact) we want to represent in the cube.

To build a cube in Pyplan we must know in advance which dimensions (Indexes) will characterize it. The wizard helps us by letting us either select existing Index nodes or create new ones directly from the column values. Once the dimensions and aggregation functions are defined, the wizard generates an xarray.DataArray cube from the original table.
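The same transformation can be approximated by hand with pandas and xarray. This is a sketch of the general technique (group, aggregate, convert), not the code the wizard emits; the table contents and column names are hypothetical.

```python
import pandas as pd

# Hypothetical table: one row per match won
df = pd.DataFrame({
    "surface": ["Clay", "Clay", "Grass", "Clay"],
    "year": [2021, 2021, 2021, 2022],
    "wins": [1, 1, 1, 1],
})

# The dimension columns become indexes; the measure is aggregated with sum
grouped = df.groupby(["surface", "year"])["wins"].sum()

# Series.to_xarray() turns the MultiIndex levels into named dimensions
cube = grouped.to_xarray()
```

Combinations that never occur in the table (here, Grass in 2022) appear in the cube as NaN, so a fill step may be needed afterwards.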

¶ Operations with data cubes

When a node returns a Data Cube (xarray.DataArray), Pyplan enables a set of cube wizards in the same place where table wizards appear. These assistants help us perform common transformations on cubes without writing all the code manually.

In addition, unlike tables, cubes support element‑wise mathematical operations between them, producing new cubes. Understanding how these operations behave is key to designing the desired calculation flow.

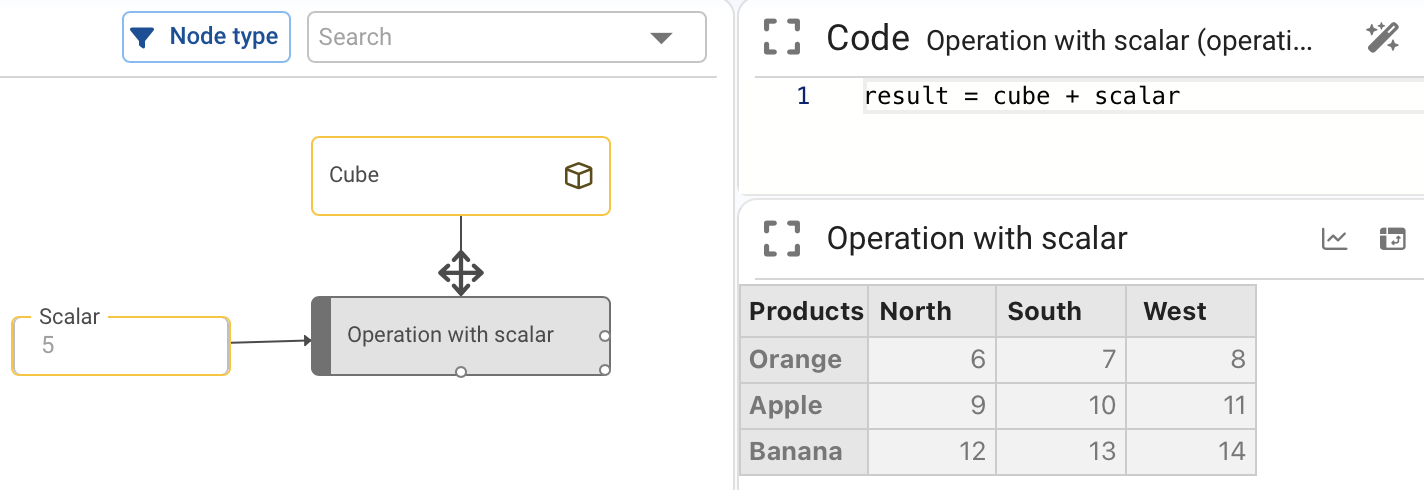

¶ Operations between a scalar and a cube

When we add, subtract, multiply, or apply any other arithmetic operation between a scalar and a cube, the operation is applied to every element of the cube.

Example node Definition:

result = cube + scalar

If scalar equals 5, then 5 is added to each cell of cube, and the resulting node returns a new cube where every value is original_value + 5.
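A small self-contained version of this case, with an invented 2 × 2 cube, might look like this:

```python
import xarray as xr

# Hypothetical cube with two named dimensions
cube = xr.DataArray(
    [[1, 2], [3, 4]],
    dims=["product", "year"],
    coords={"product": ["A", "B"], "year": [2023, 2024]},
)
scalar = 5

result = cube + scalar  # 5 is added to every cell
```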

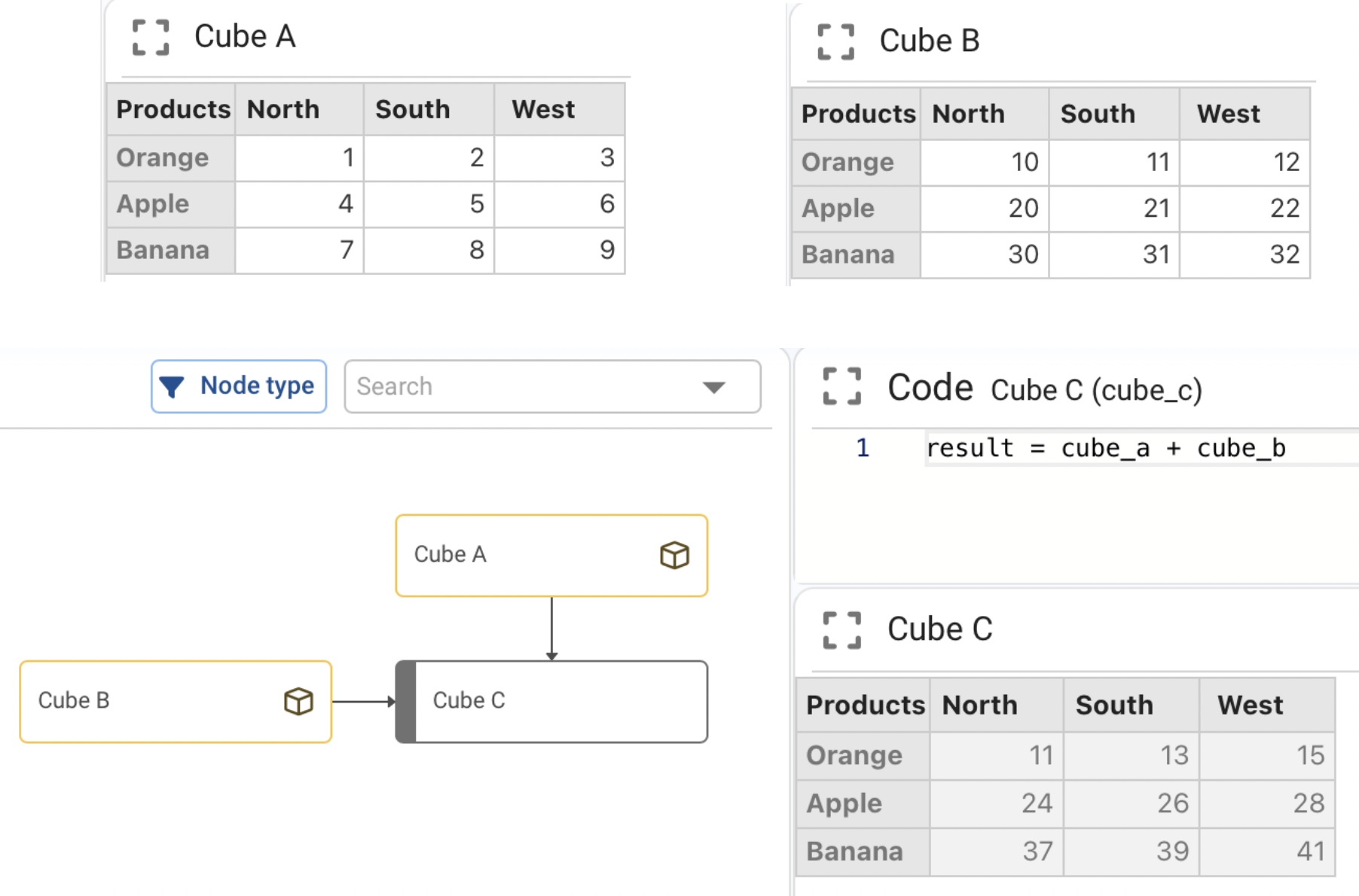

¶ Operations between two cubes of equal dimensions

When two cubes share exactly the same dimensions (same indexes and coordinate sets), the operation is performed cell by cell.

For example, if we compute

result = cube_a + cube_b

then the first cell of result is the sum of the first cell of cube_a and the first cell of cube_b, and so on for every coordinate combination.
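One detail worth knowing is that xarray pairs cells by coordinate label rather than by position. In the sketch below (cube contents are invented), cube_b stores the same products in a different order and the sum still lines up by product.

```python
import xarray as xr

cube_a = xr.DataArray(
    [10, 20, 30], dims=["product"], coords={"product": ["A", "B", "C"]}
)
# Same products, stored in a different order
cube_b = xr.DataArray(
    [3, 2, 1], dims=["product"], coords={"product": ["C", "B", "A"]}
)

# xarray matches cells by coordinate label before adding
result = cube_a + cube_b
```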

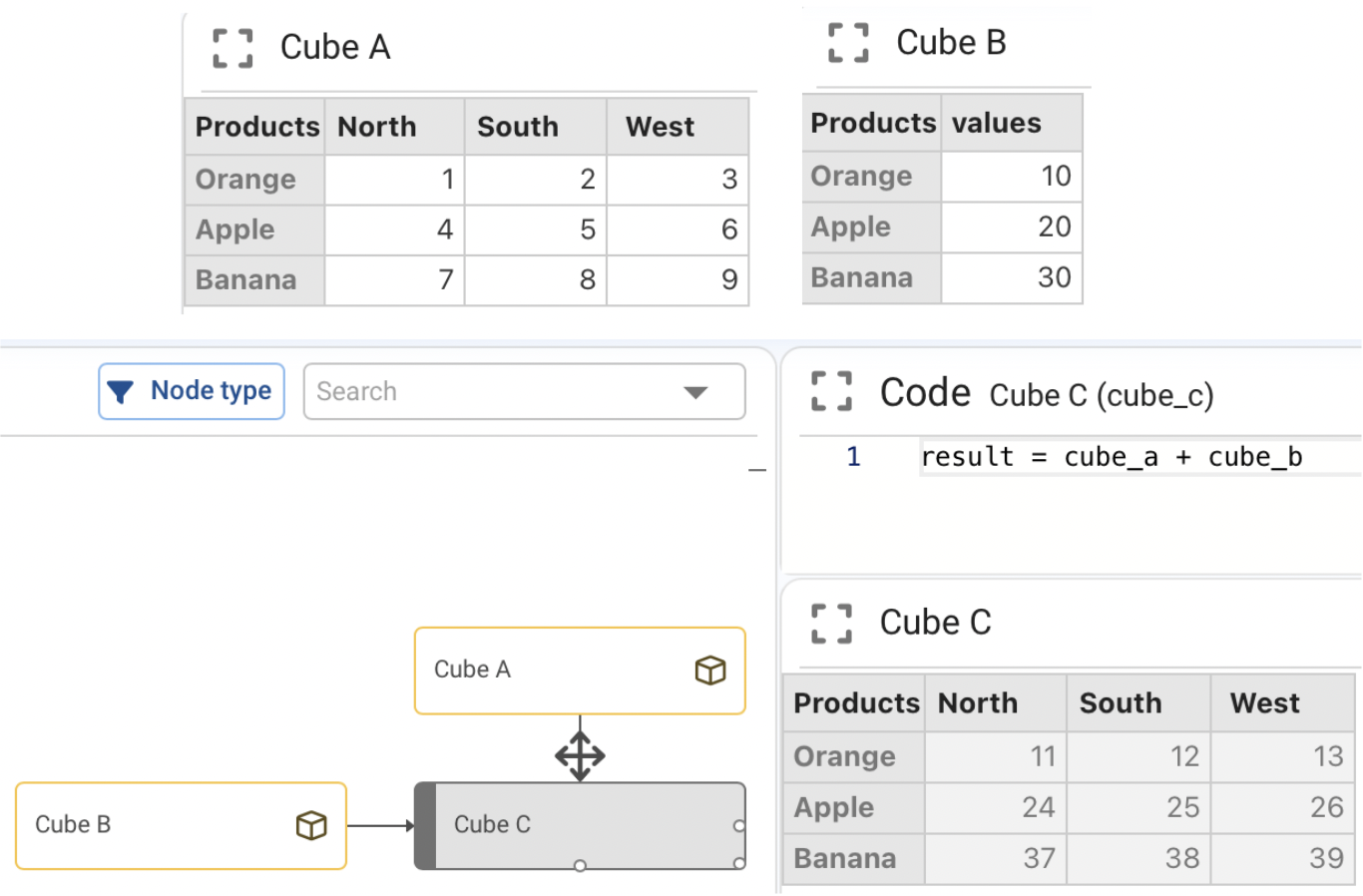

¶ Operations between two cubes of different dimensions

When operating on cubes that do not share all dimensions, the dimensions that are missing in one cube are treated scalar‑wise along those axes.

For example, suppose:

- cube_a has dimensions [Product, Region, Year].

- cube_b has dimensions [Product, Year] (no Region dimension).

If we compute:

result = cube_a + cube_b

then cube_b is broadcast across the Region dimension: for each (Product, Year) pair, the same value from cube_b is added to all regions in cube_a. In other words, cube_b behaves as a scalar with respect to the missing dimension.

The complete list of supported operations and broadcasting rules for cubes is described in the xarray documentation: <https://docs.xarray.dev/en/stable/user-guide/computation.html>
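The broadcasting case with a missing Region dimension can be reproduced with two small cubes. The coordinate values below are hypothetical; cube_a is filled with zeros so that the result shows directly which value of cube_b reached each cell.

```python
import numpy as np
import xarray as xr

cube_a = xr.DataArray(
    np.zeros((2, 3, 2)),
    dims=["product", "region", "year"],
    coords={
        "product": ["P1", "P2"],
        "region": ["North", "South", "West"],
        "year": [2023, 2024],
    },
)
cube_b = xr.DataArray(
    [[1.0, 2.0], [3.0, 4.0]],
    dims=["product", "year"],
    coords={"product": ["P1", "P2"], "year": [2023, 2024]},
)

# cube_b has no region dimension, so its values repeat for every region
result = cube_a + cube_b
```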

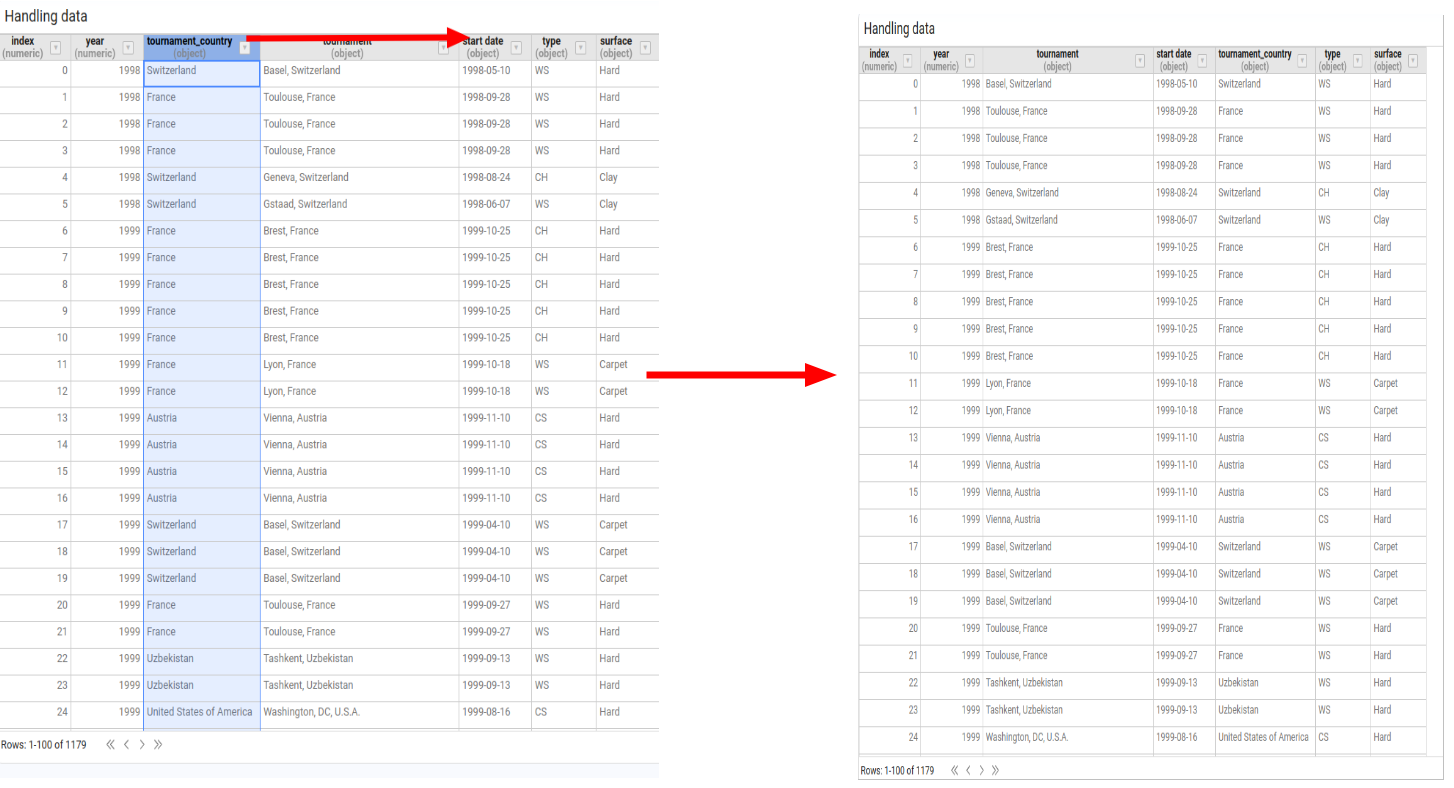

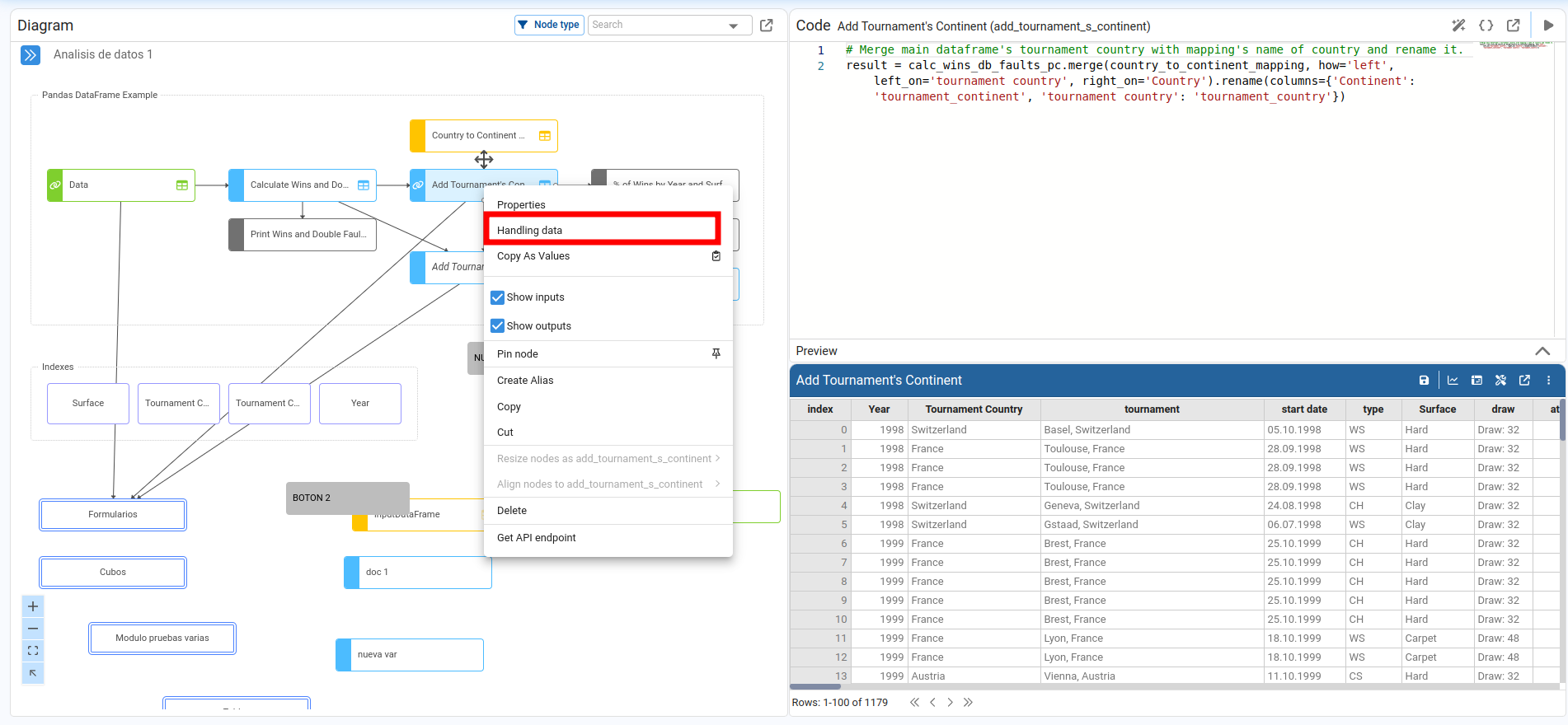

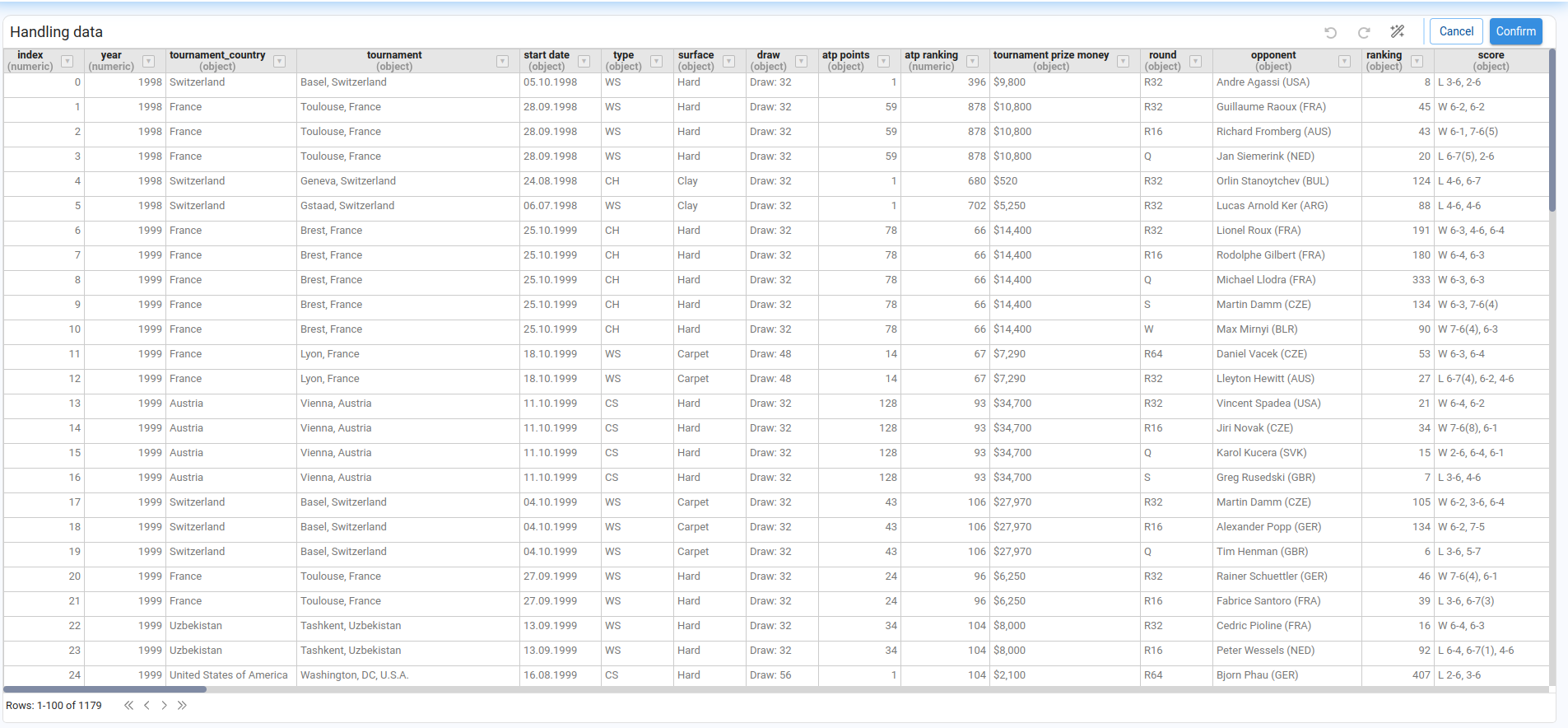

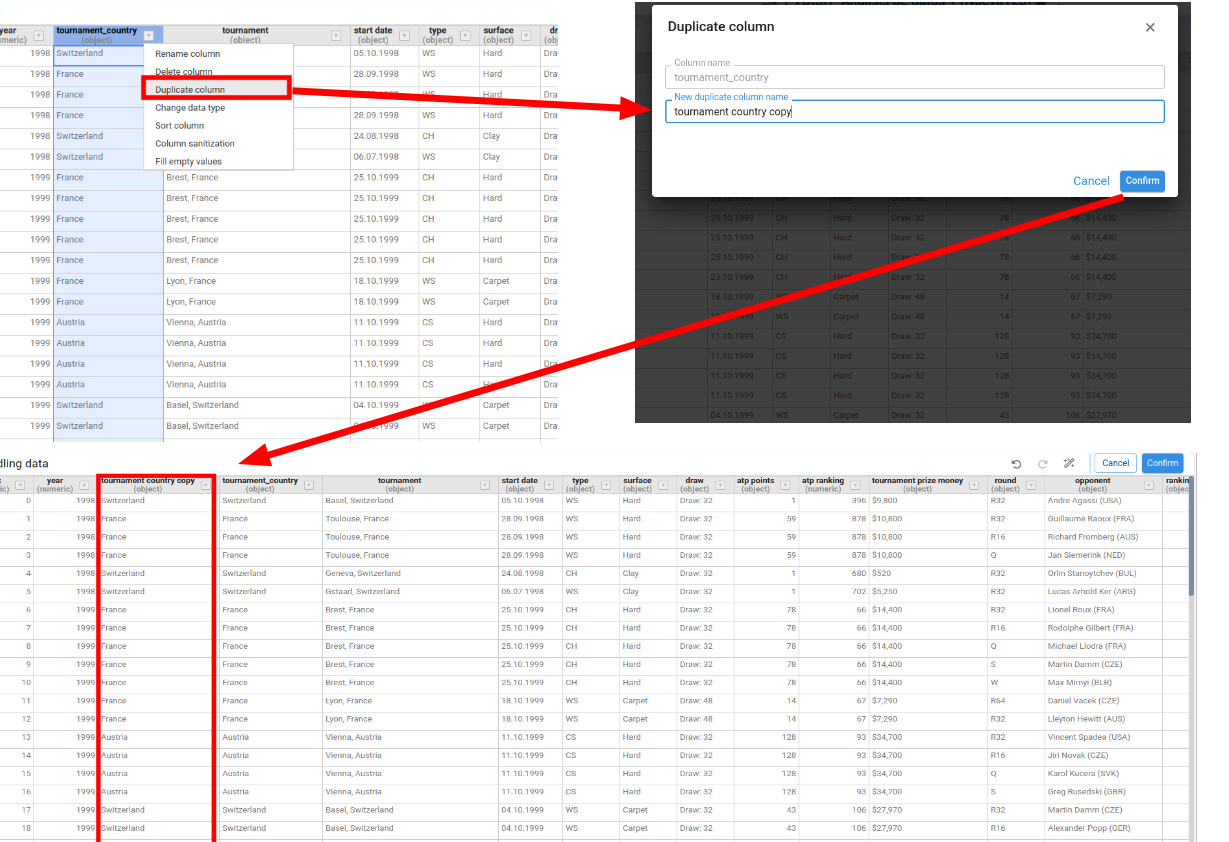

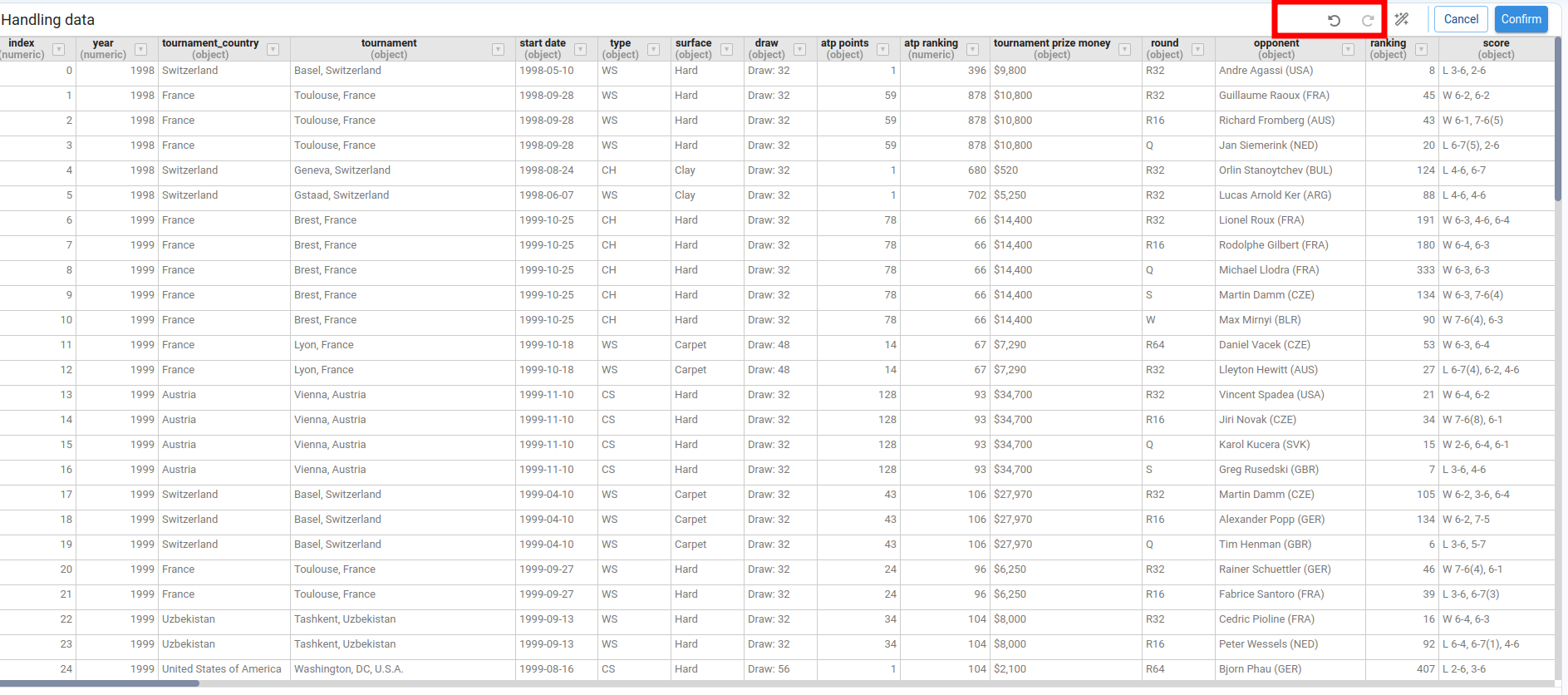

¶ Wizards to manipulate Dataframe nodes

Pyplan includes a set of wizards that help us transform pandas.DataFrame results without writing all the code manually.

To open these wizards, we first execute the node that returns the DataFrame. Then we:

- Open the node menu (right‑click or menu icon on the node).

- Select Handling data.

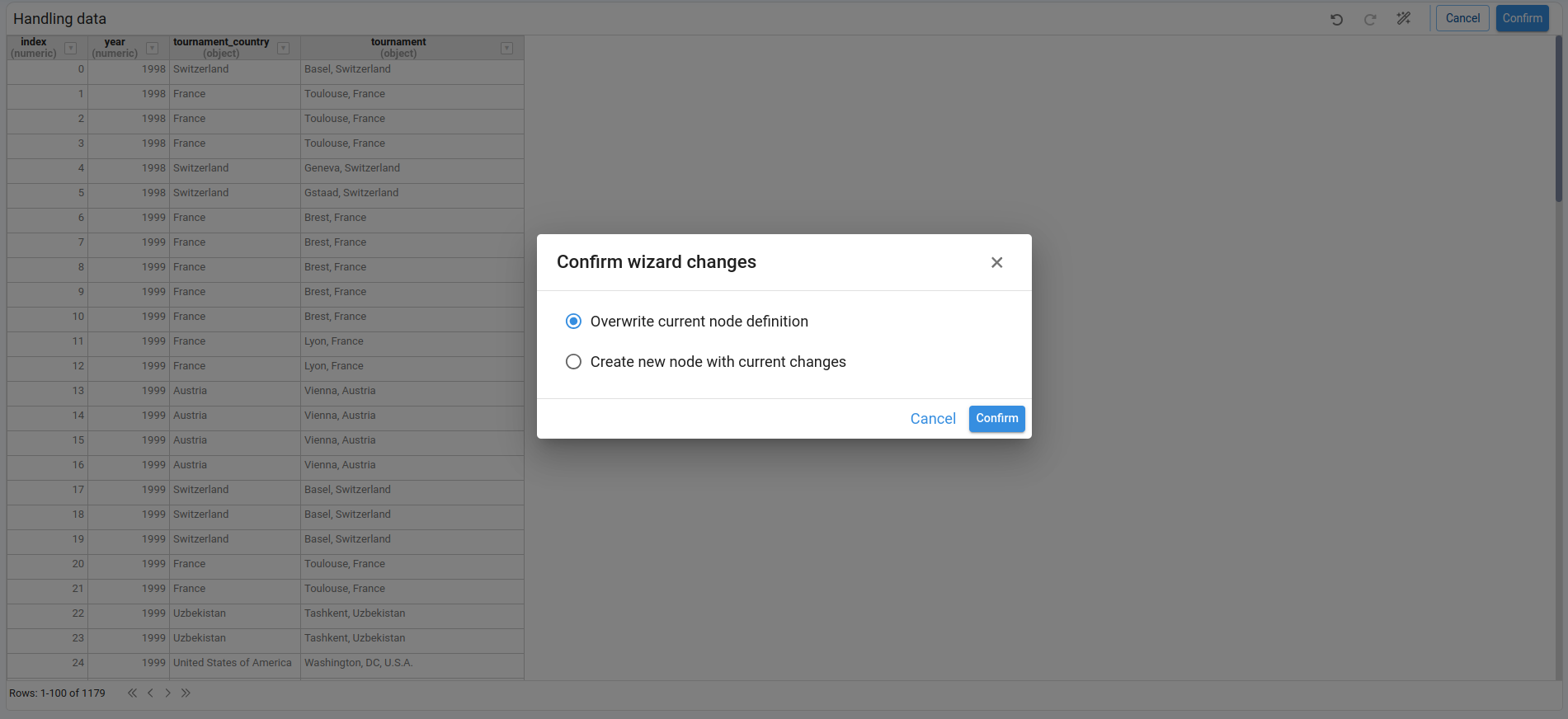

A new widget opens showing the node result in a paginated table. Column headers display both the column name and its data type.

The available wizards are grouped into two categories:

- Wizards that affect the entire DataFrame.

- Wizards that affect only a single column.

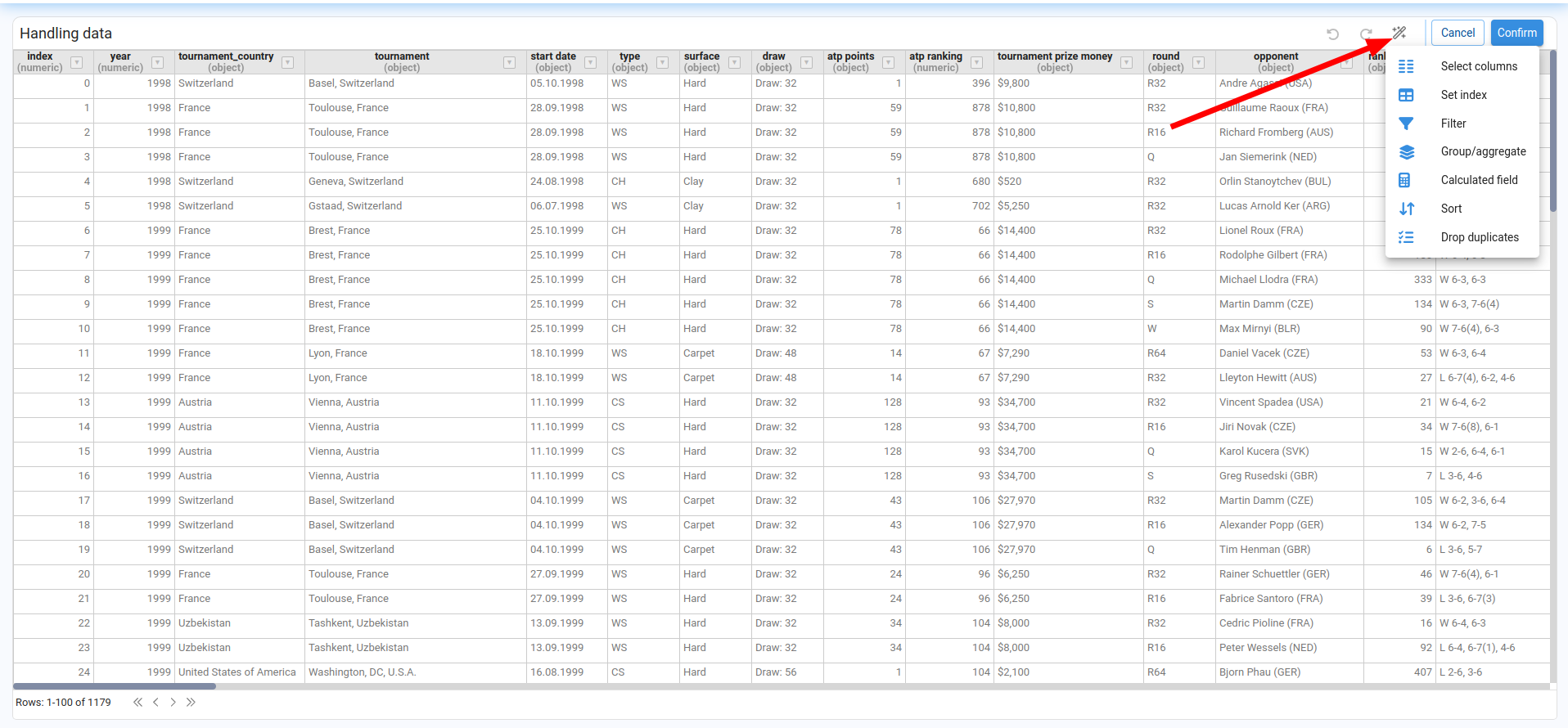

¶ Wizards that impact the entire dataframe

These wizards modify the table as a whole.

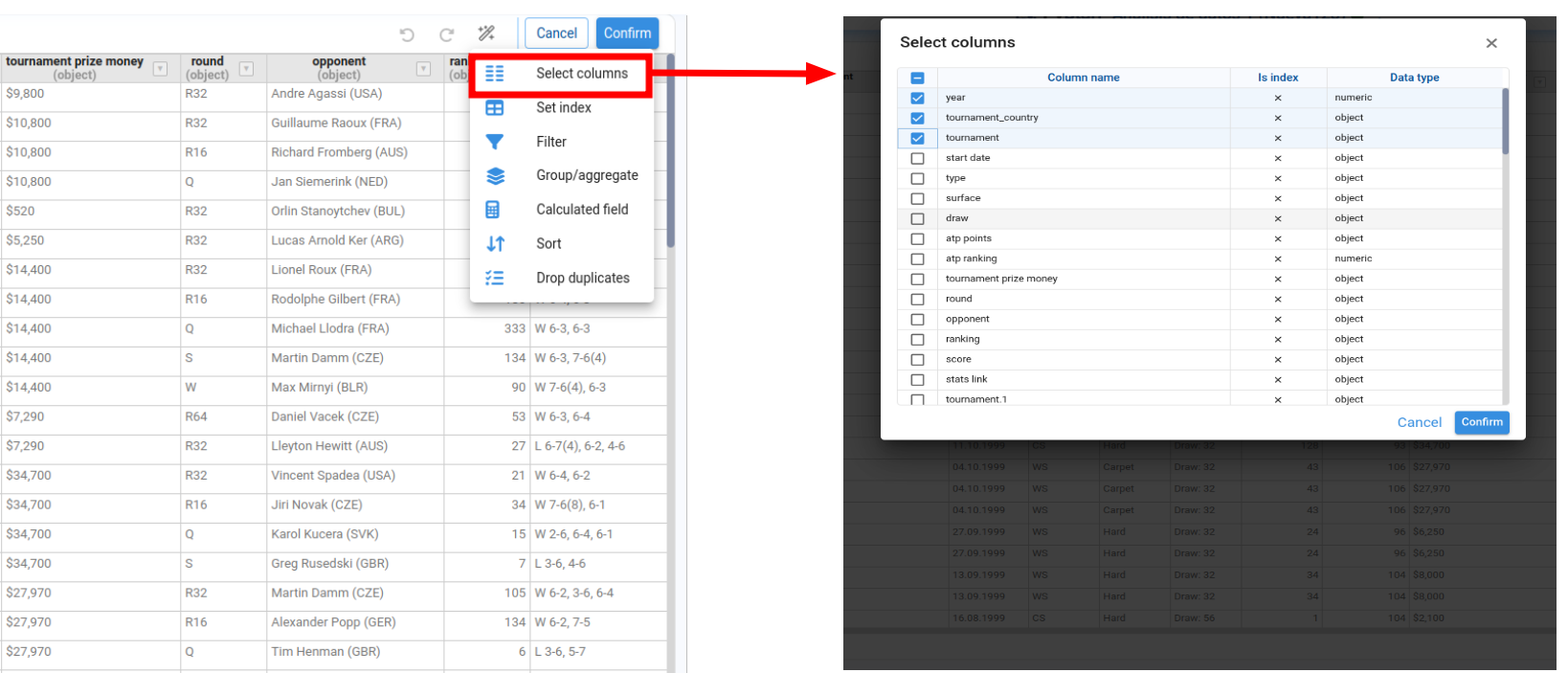

¶ Select columns

The Select columns wizard lists all columns in the DataFrame, together with their data type and an indicator showing whether each column is an index.

- We select the columns we want to keep.

- When we confirm, the widget preview updates to show only those columns.

Changes are not applied to the original node until we explicitly confirm:

- If we click Cancel, the widget closes and the node remains unchanged.

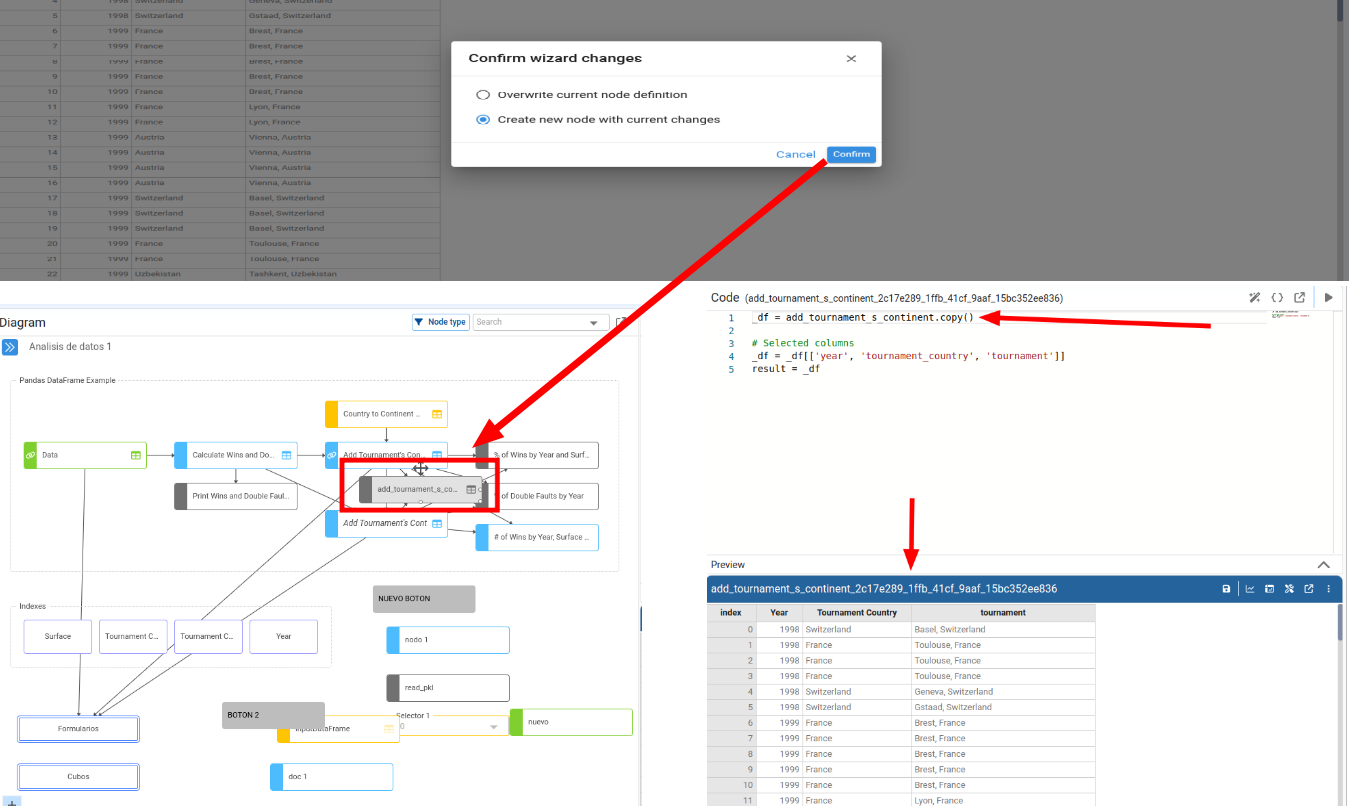

- If we confirm, we can choose to:

- Overwrite current node definition, or

- Create a new node with current changes that uses the transformed DataFrame as its result.

When we apply the changes to the current node, the widget closes and the original node is re‑selected with the modified DataFrame.

When we choose to create a new node, the widget also closes but the new node is selected and executed, with its Definition automatically filled with the generated code.

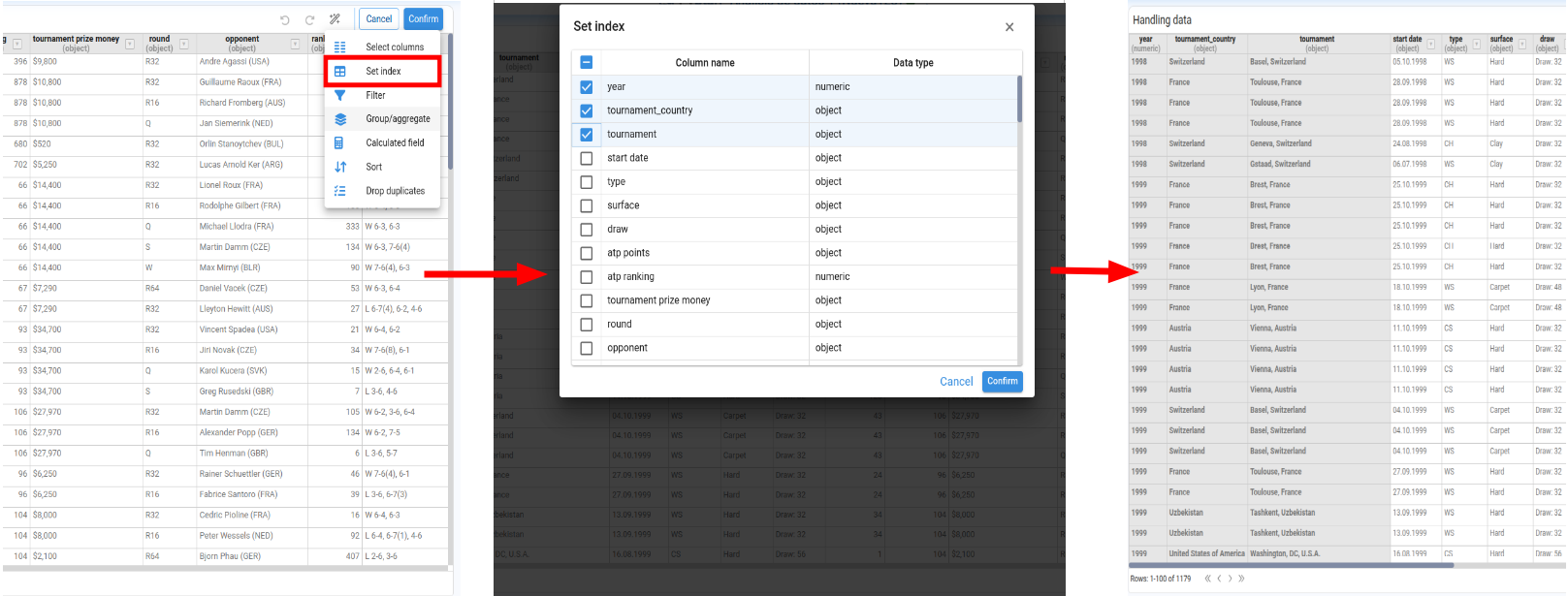

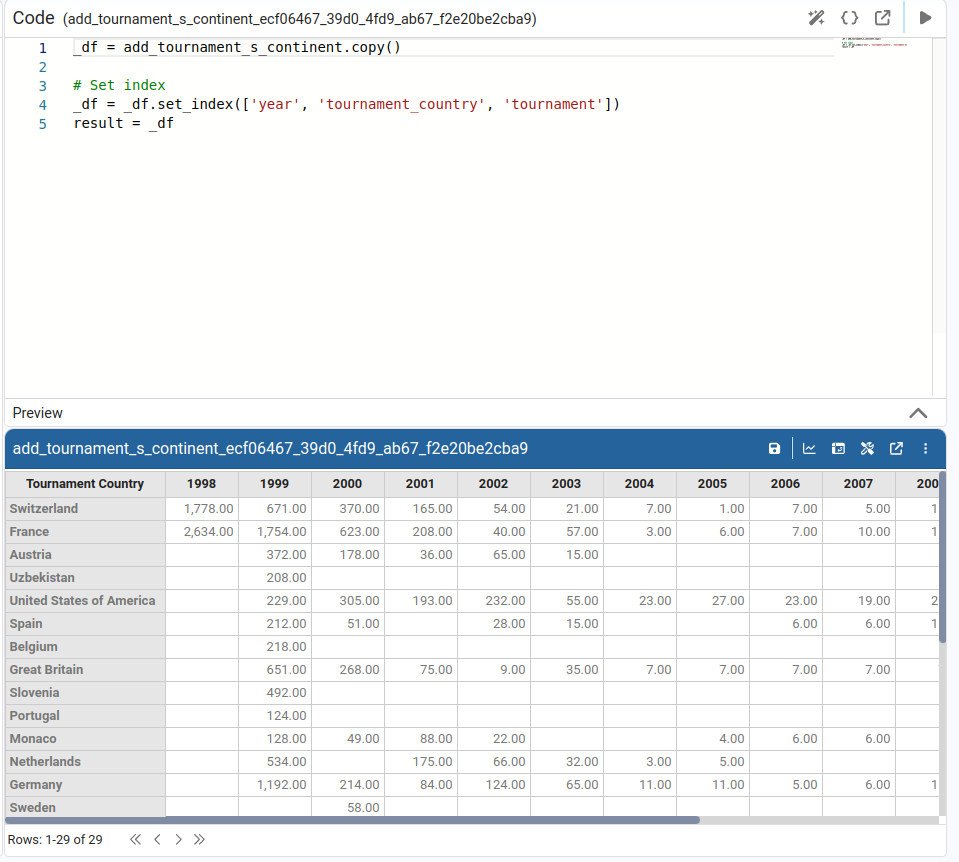

¶ Select index

The index selection wizard lets us choose one or more columns to act as indexes of the DataFrame.

- We select the columns to use as indexes.

- On confirmation, the widget shows the DataFrame with the new index configuration, and the Definition code is updated accordingly (for example, using set_index).

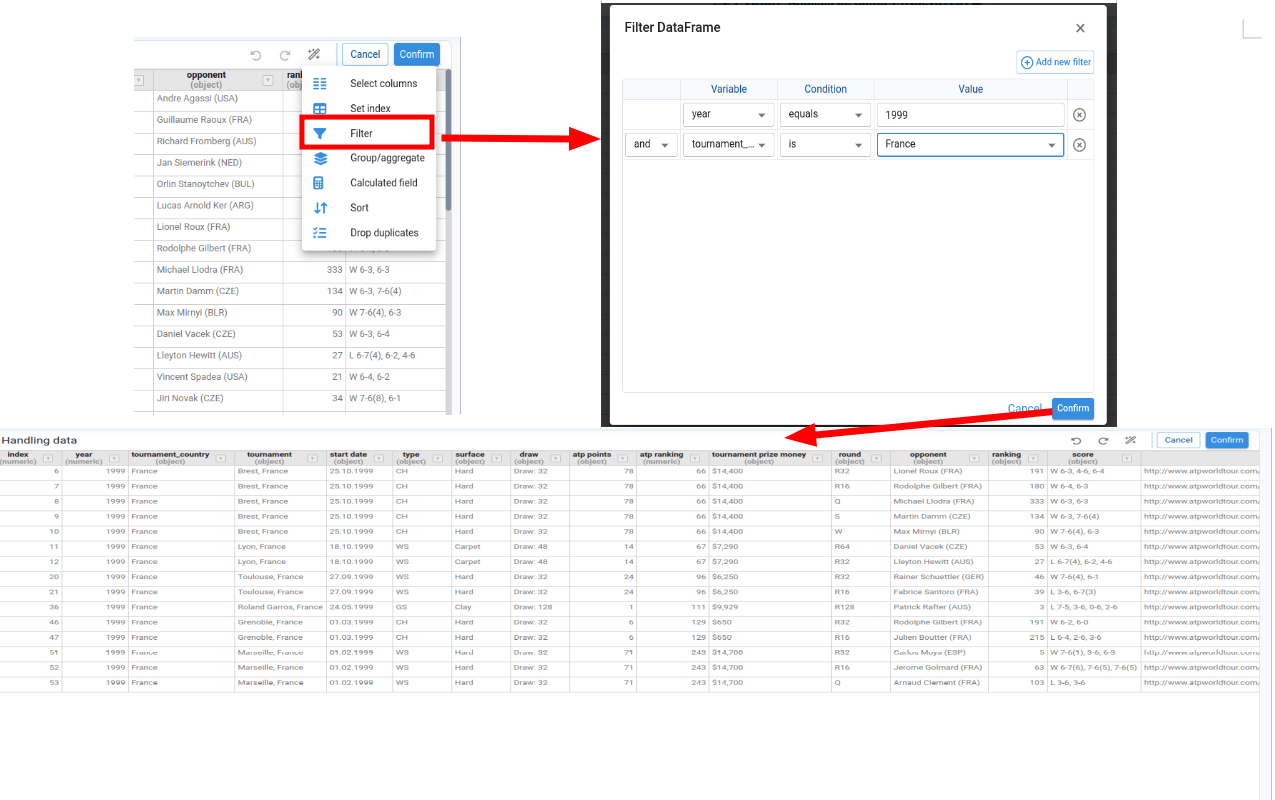

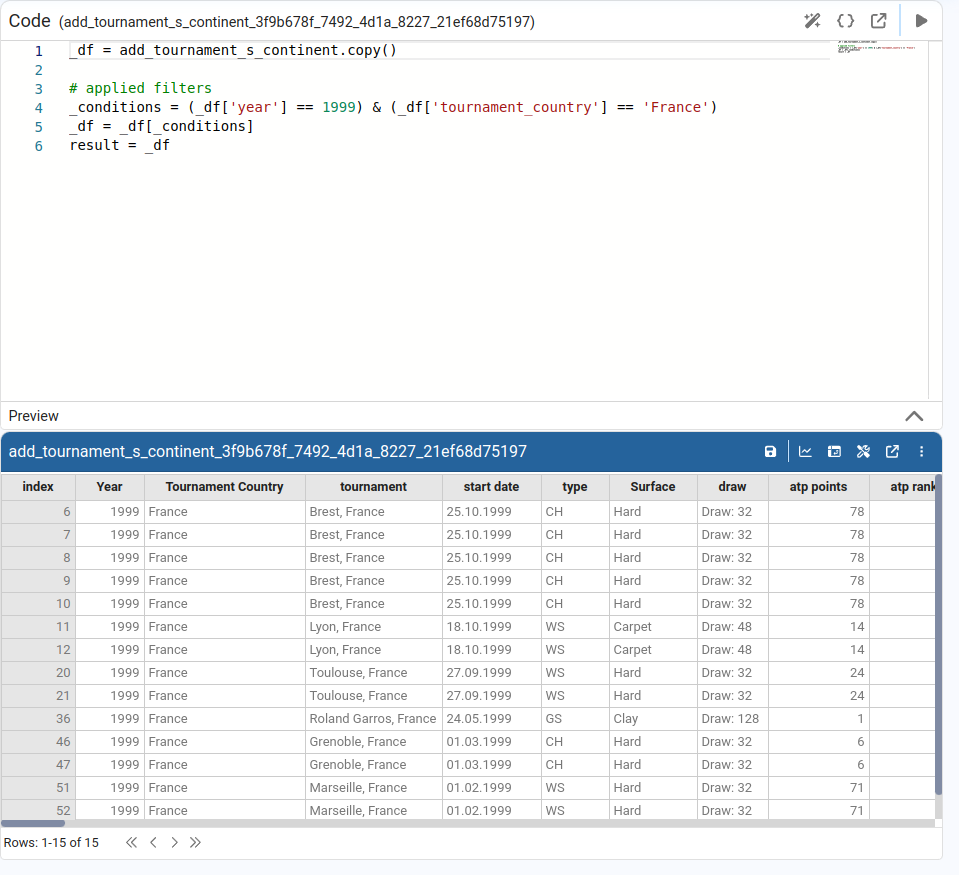

¶ Filter rows

The filtering wizard allows us to apply one or more filters to the DataFrame.

- Each row in the wizard represents a filter condition on a column (column, operator, value).

- Filters are combined using AND or OR, according to the operator we choose.

When we confirm, the wizard generates the corresponding Pandas code (for example, using boolean masks or query) and updates the DataFrame preview.
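Both styles of filter mentioned above can be sketched as follows. The DataFrame and its columns are hypothetical, and the exact code the wizard writes may differ.

```python
import pandas as pd

df = pd.DataFrame({
    "surface": ["Clay", "Grass", "Hard", "Clay"],
    "year": [2019, 2021, 2022, 2023],
})

# Two conditions combined with AND (&); use | for OR
mask = (df["surface"] == "Clay") & (df["year"] >= 2020)
filtered = df[mask]

# The same filter written with DataFrame.query
filtered_q = df.query("surface == 'Clay' and year >= 2020")
```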

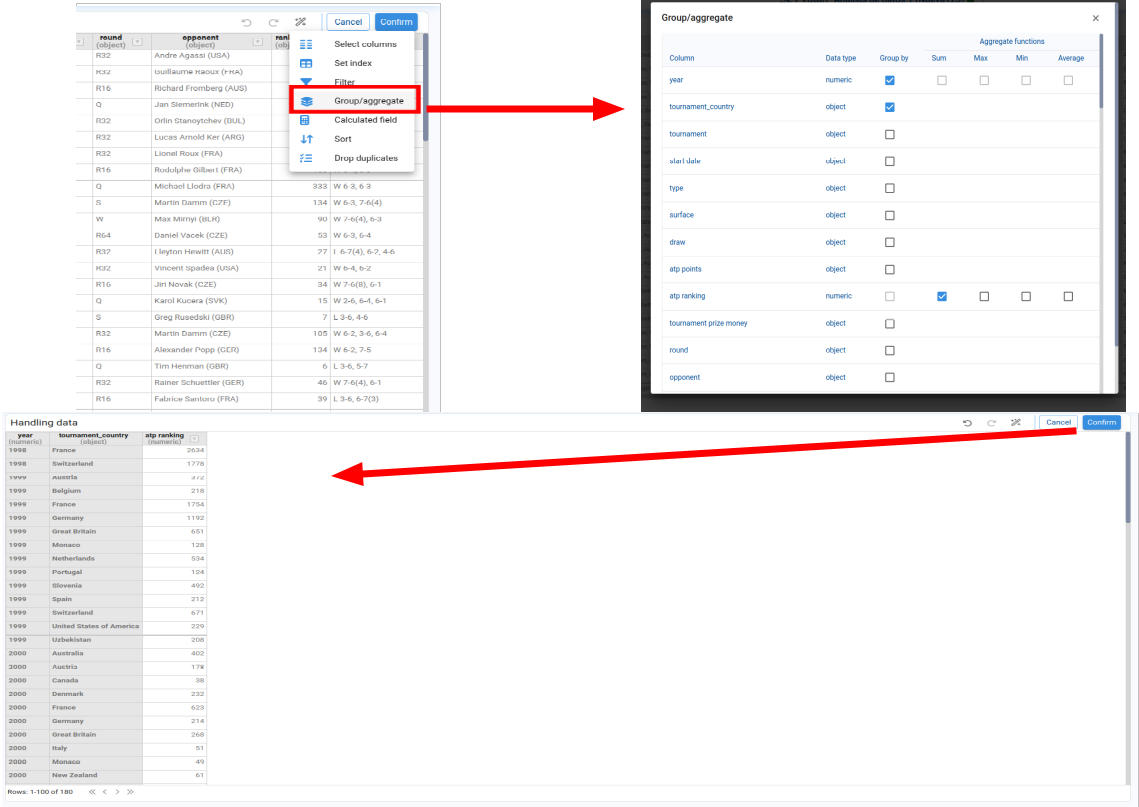

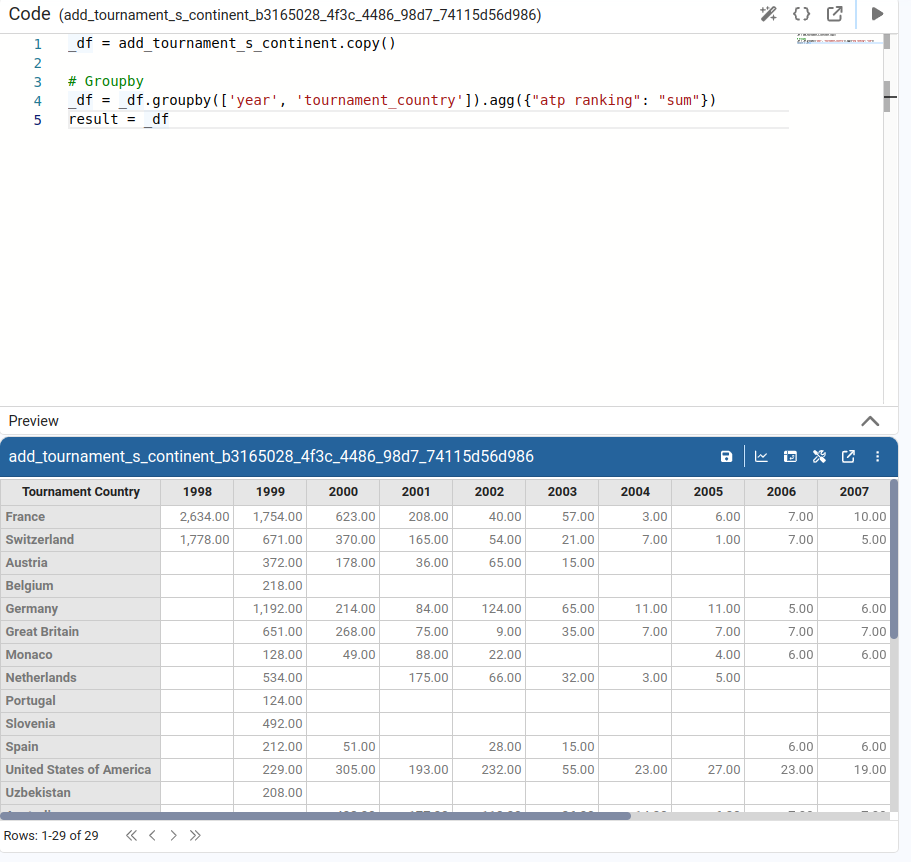

¶ Group / Aggregate

The group/aggregate wizard is used to perform aggregations.

- It lists all columns, indicating which can be used for aggregation (numeric columns) and which can only be used for grouping.

- We choose the grouping columns and the aggregation functions (sum, mean, count, etc.) for the numeric columns.

On confirmation, Pyplan builds the group/aggregate expression (for example, groupby(...).agg(...)) and updates the node’s Definition and result.
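A group/aggregate expression of this kind could look like the sketch below, where each numeric column gets its own aggregation function. Column names and values are invented for the example.

```python
import pandas as pd

df = pd.DataFrame({
    "surface": ["Clay", "Clay", "Grass"],
    "aces": [4, 6, 10],
    "minutes": [90, 110, 120],
})

# Group by surface; aggregate each numeric column with its own function
summary = (
    df.groupby("surface", as_index=False)
      .agg({"aces": "sum", "minutes": "mean"})
)
```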

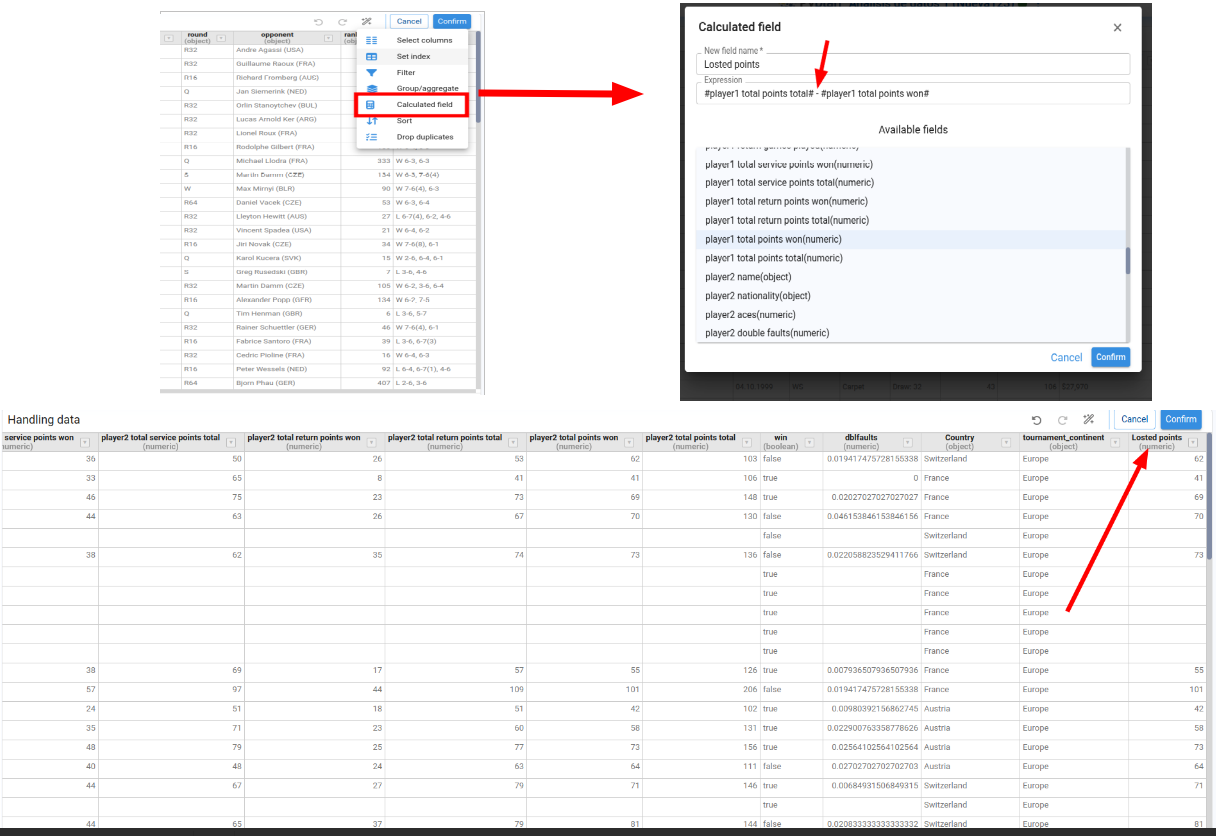

¶ Add calculated field

This wizard lets us add a new calculated column to the DataFrame.

- We specify the new column name.

- We define the calculation for each row, typically as an expression combining existing columns.

For example, we can create a Total lost points column as:

player1 total points total – player1 total points won.

The new column is appended at the end of the table, and the wizard generates the necessary code (for example, _df['lost_points'] = ...).

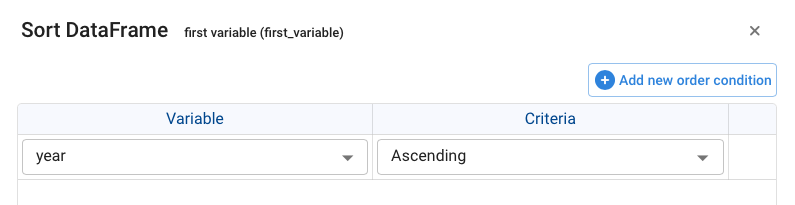

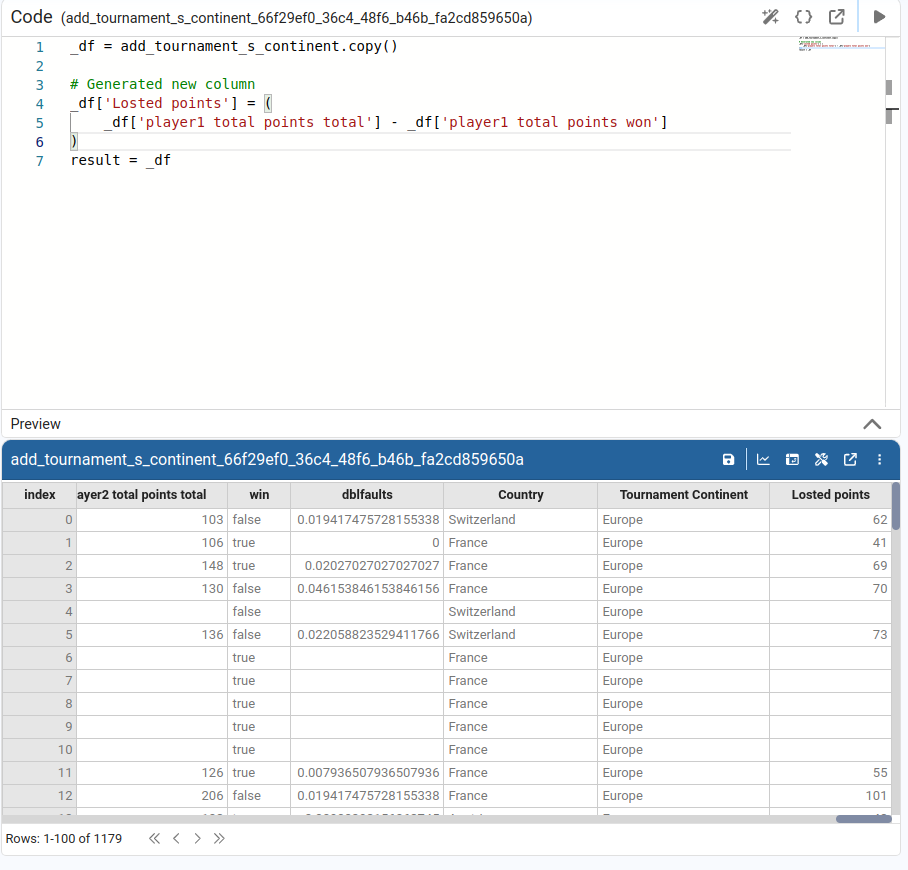

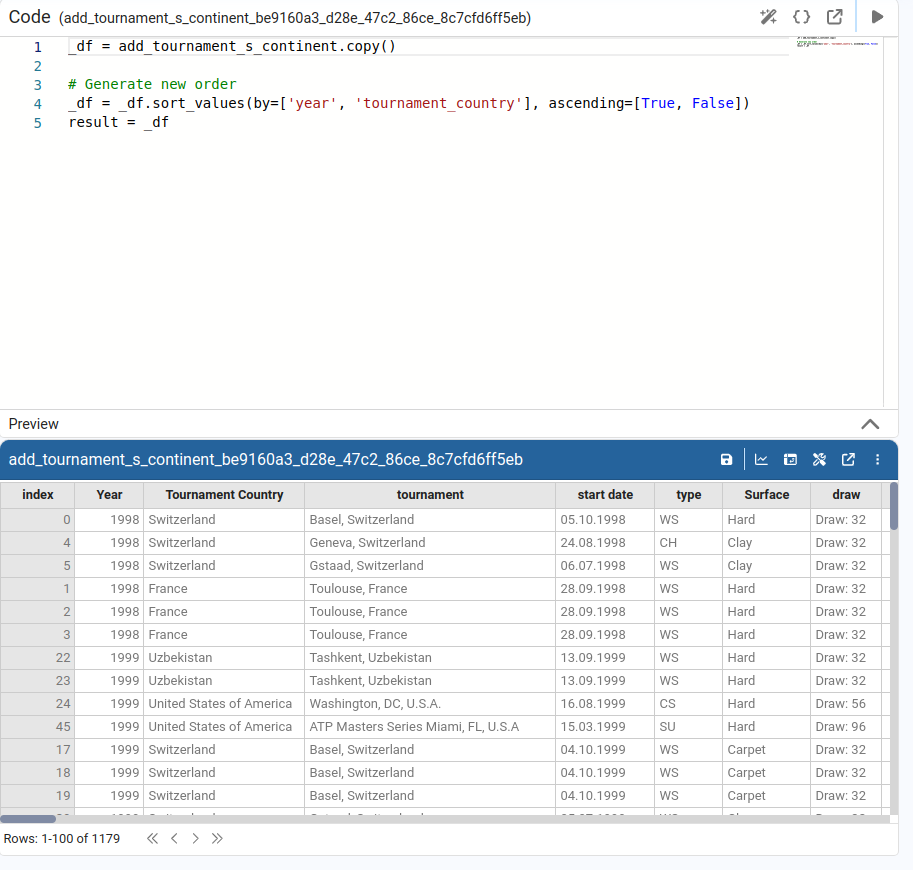

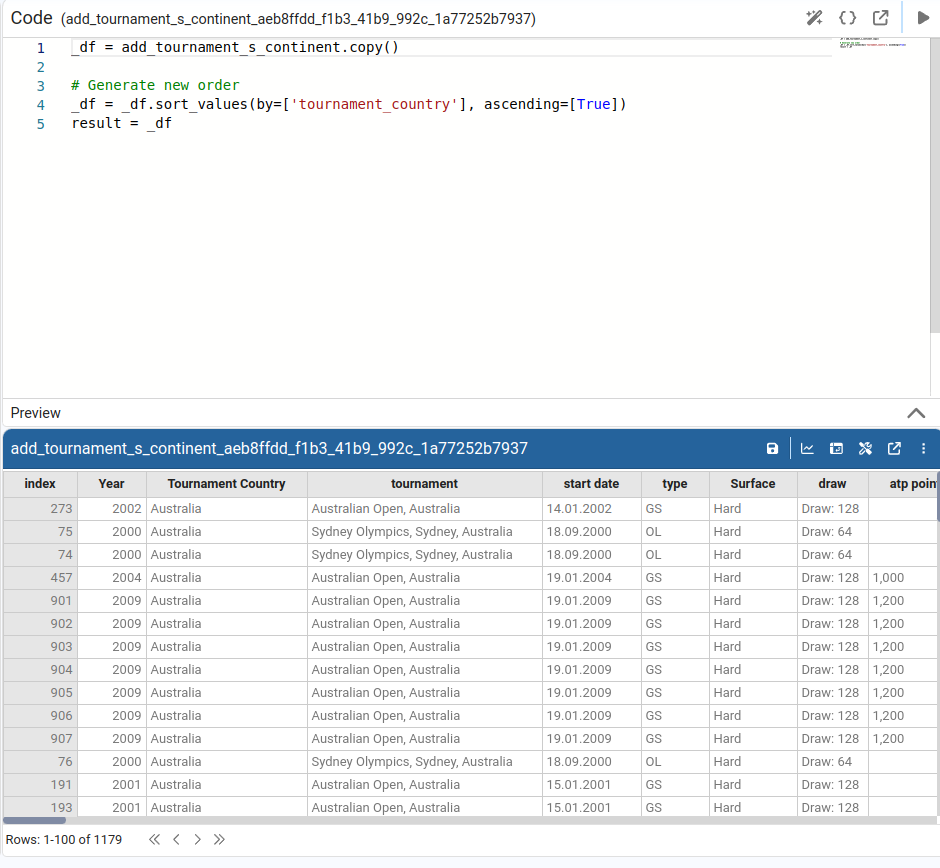

¶ Sort DataFrame

The sort DataFrame wizard allows us to sort the table by one or more columns.

- We select the columns to sort by and choose ascending or descending order for each one.

The generated code uses the appropriate Pandas sort function (for example, sort_values) and updates the preview.
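A multi-column sort with a different direction per column can be sketched like this (hypothetical data):

```python
import pandas as pd

df = pd.DataFrame({
    "year": [2022, 2021, 2022],
    "player": ["B", "A", "A"],
})

# Sort by year descending, then by player ascending within each year
sorted_df = df.sort_values(by=["year", "player"], ascending=[False, True])
```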

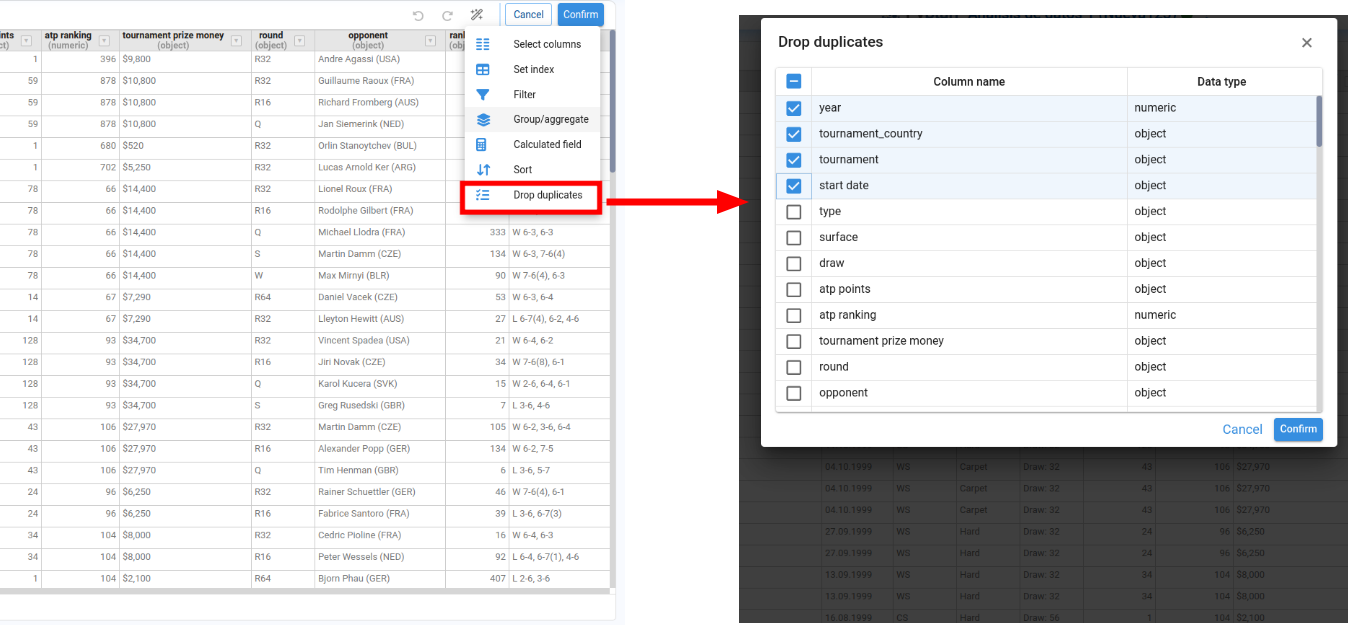

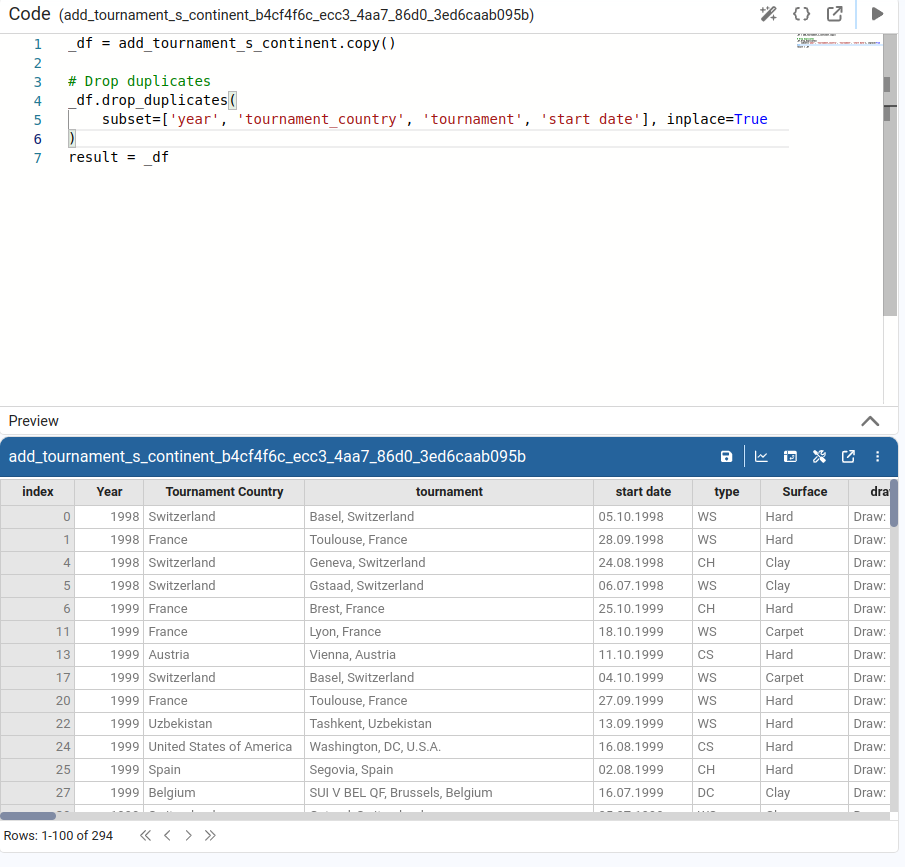

¶ Drop duplicates

The last wizard in this group is drop duplicates.

- It lists all columns and lets us choose the subset of columns to consider when removing duplicate rows.

On confirmation, Pyplan calls drop_duplicates on the DataFrame and updates both the preview and the Definition.
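As an illustration with made-up data, restricting the comparison to a subset of columns looks like this in pandas:

```python
import pandas as pd

df = pd.DataFrame({
    "player": ["A", "A", "B"],
    "year": [2021, 2021, 2021],
    "score": [5, 7, 3],
})

# Rows count as duplicates when player and year match; the first is kept
deduped = df.drop_duplicates(subset=["player", "year"])
```

Note that score is ignored when deciding what is a duplicate, so the row with score 7 is dropped even though its score differs.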

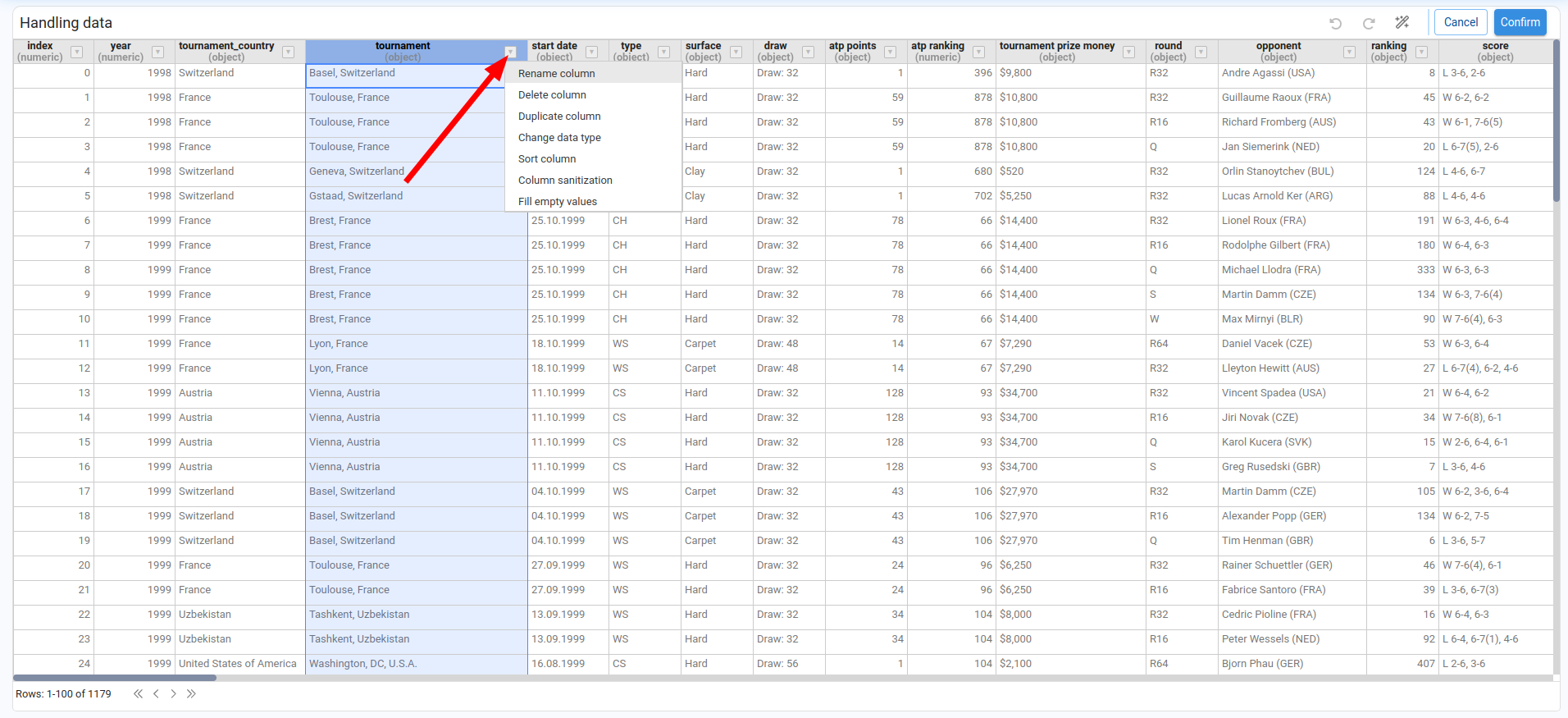

¶ Wizards that impact at the column level

These wizards operate only on the selected column; they do not modify data in other columns. They are available from the table header of each column.

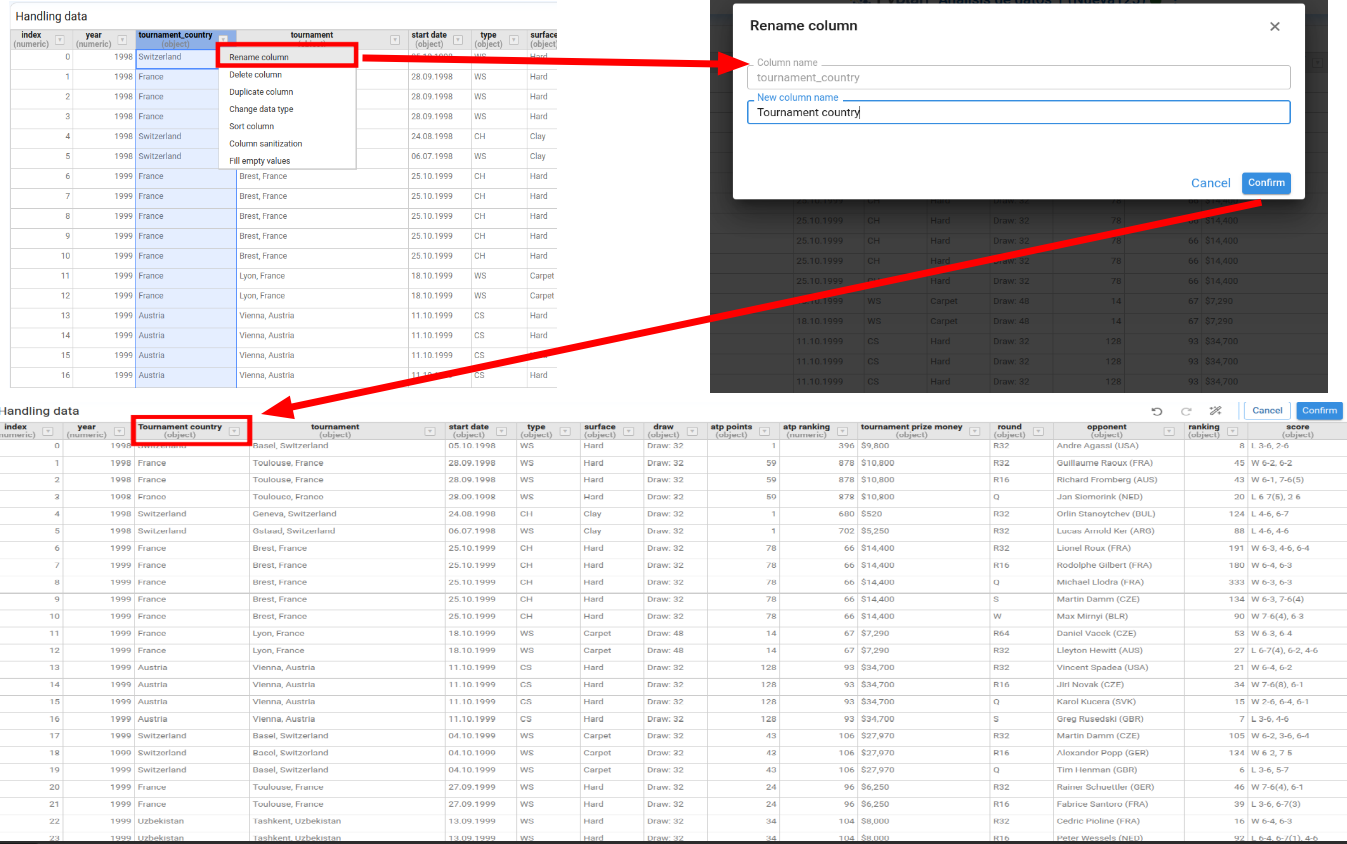

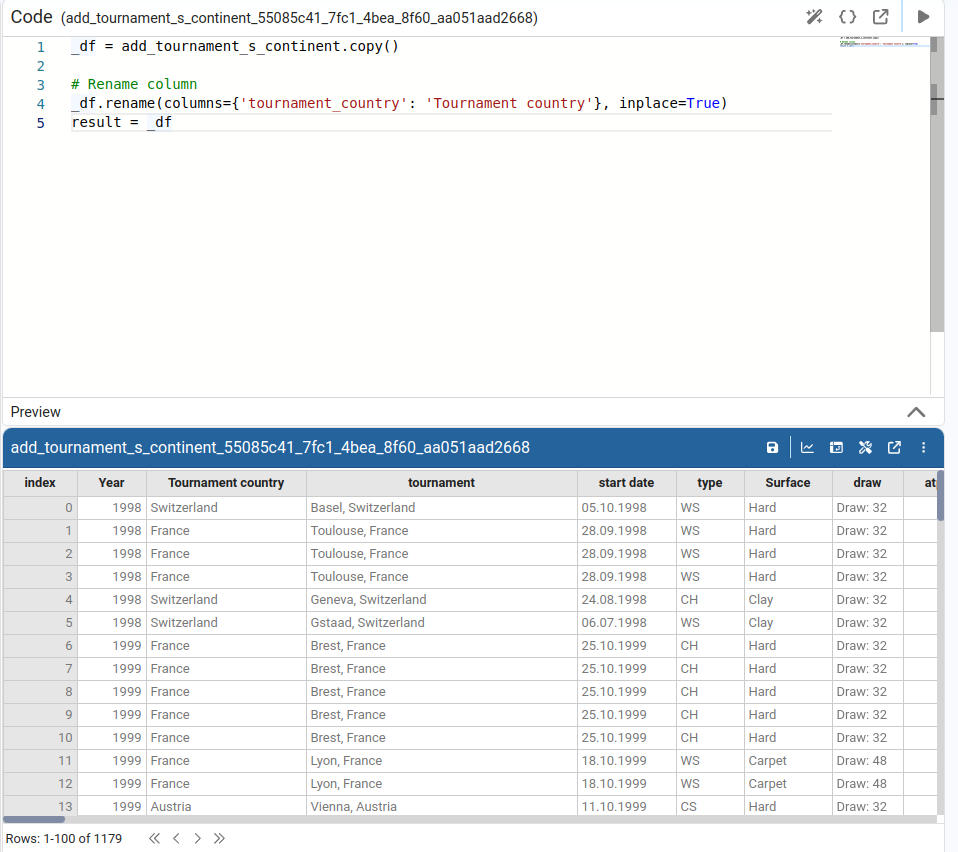

¶ Rename column

The rename column wizard lets us enter a new name for the selected column.

- We type the new name and confirm.

- The wizard updates the column header and the node Definition accordingly (for example, using rename or direct assignment).

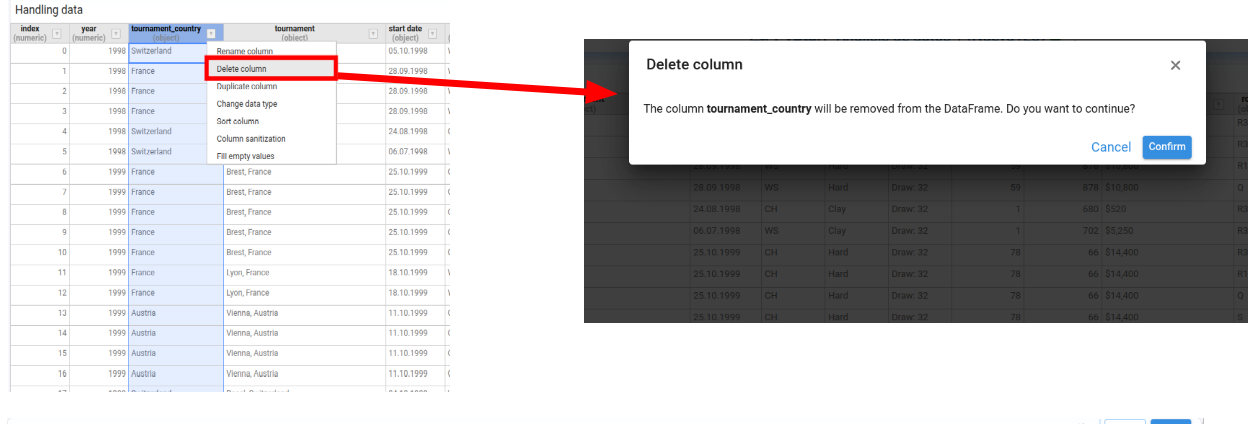

¶ Delete column

The delete column wizard removes the selected column from the DataFrame.

- We confirm the action and the column is deleted from the table.

- The generated code usually uses drop.

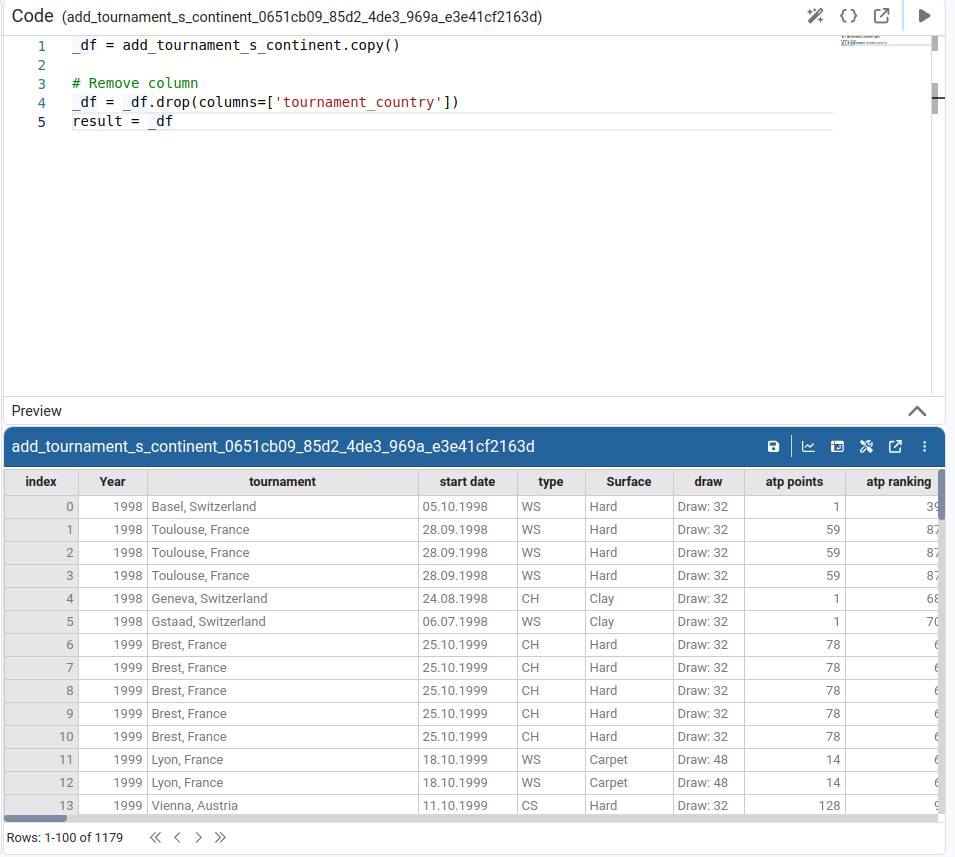

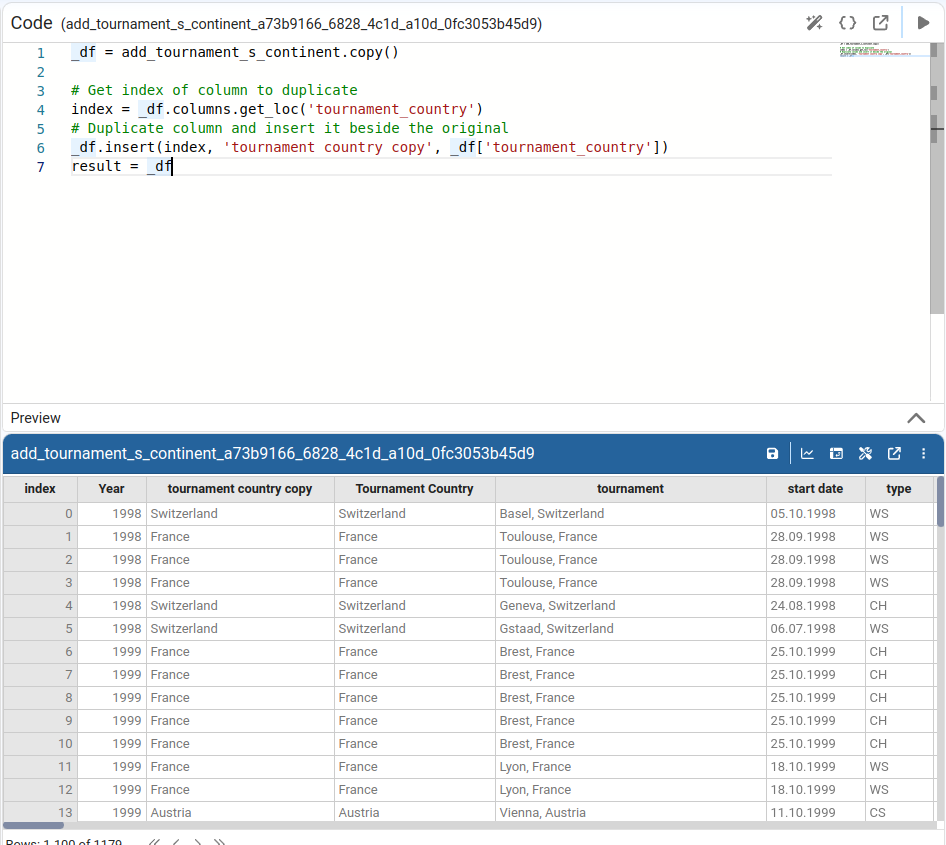

¶ Duplicate column

The duplicate column wizard creates a copy of the selected column with a new name.

- We provide the name for the new column.

- The wizard generates code that copies the values (for example, _df['new_name'] = _df['old_name']).

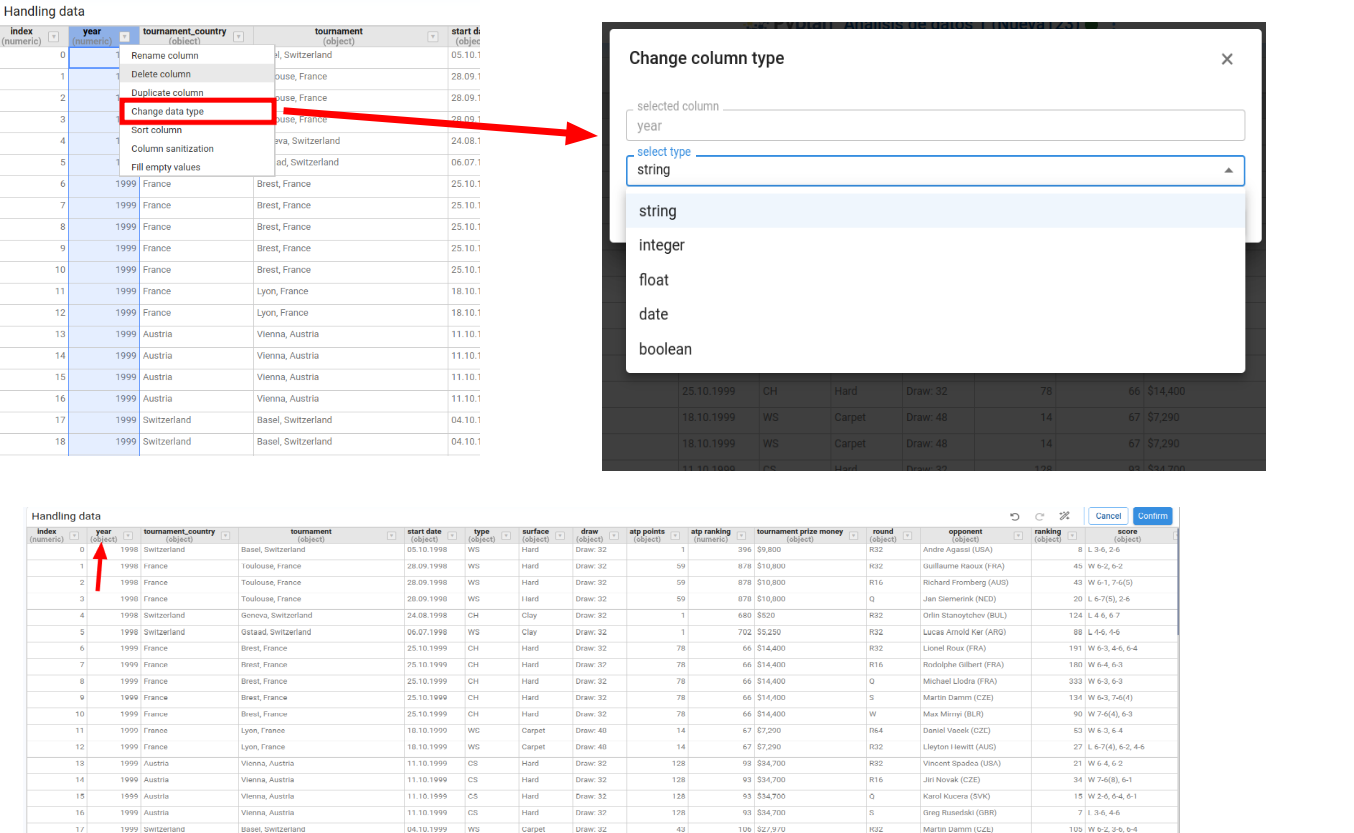

¶ Change data type

This wizard changes the data type of the selected column. The current type is shown under the column title.

Available target types are:

- string

- integer

- float

- date

- boolean

When we choose date, we can also specify a date format for parsing or display.

The generated code uses the appropriate conversion functions (for example, astype, to_datetime).
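Two typical conversions, including a date with an explicit format, are sketched below. The column names and the day/month/year format are assumptions for the example.

```python
import pandas as pd

df = pd.DataFrame({
    "units": ["1", "2", "3"],
    "played_on": ["31/12/2023", "01/01/2024", "15/02/2024"],
})

# string to integer
df["units"] = df["units"].astype(int)

# string to date, stating the format used in the source text
df["played_on"] = pd.to_datetime(df["played_on"], format="%d/%m/%Y")
```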

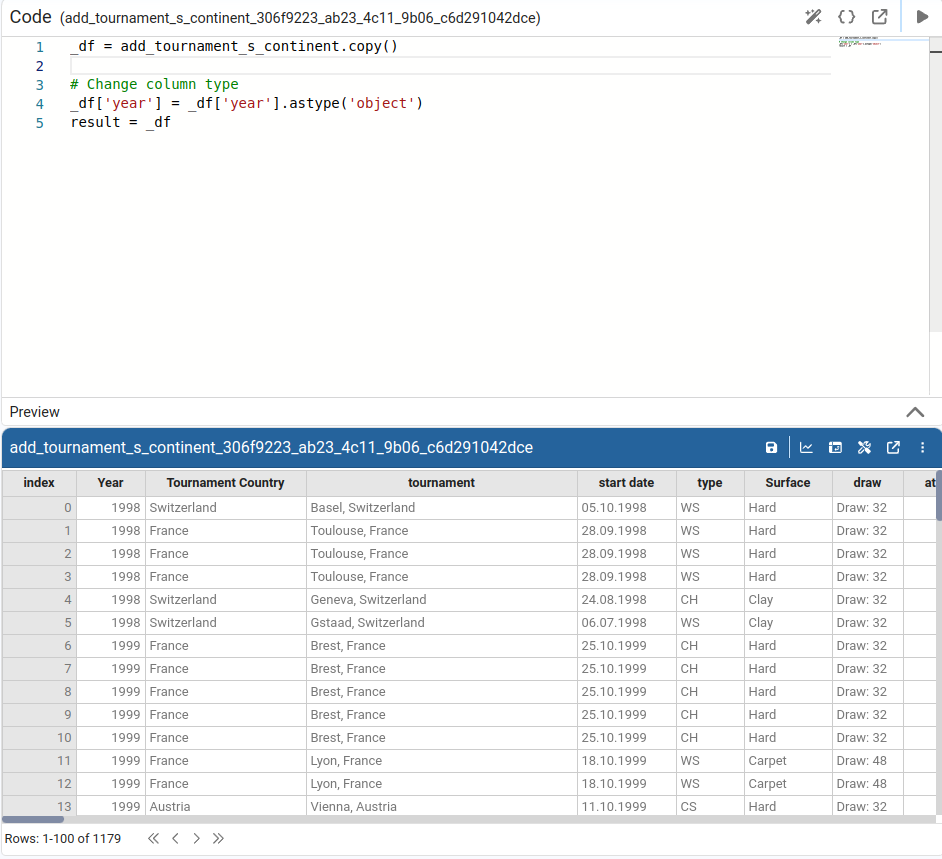

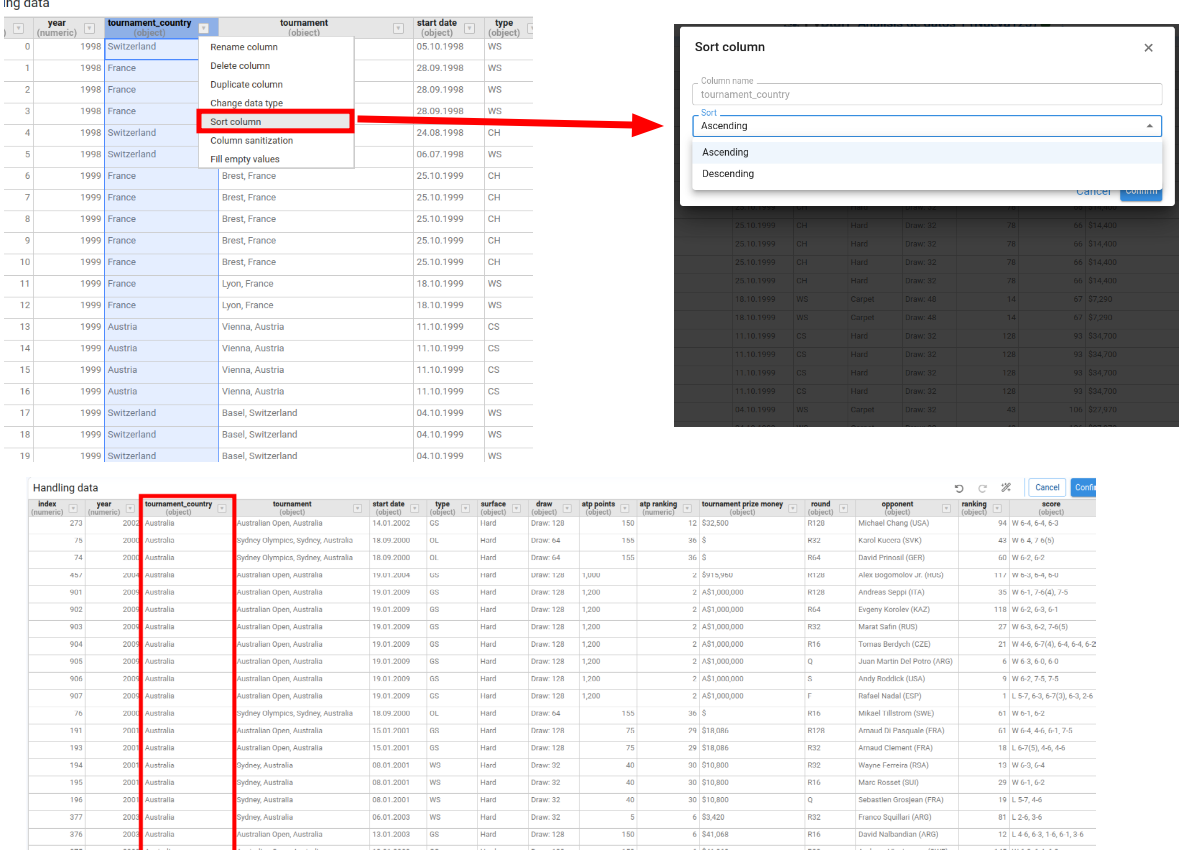

¶ Sort column

The sort column wizard is a simplified sort: it orders the DataFrame by the selected column only (ascending or descending), moving whole rows so that the other columns stay aligned. Internally it typically uses sort_values on that column.

Code generated by the sort column wizard:

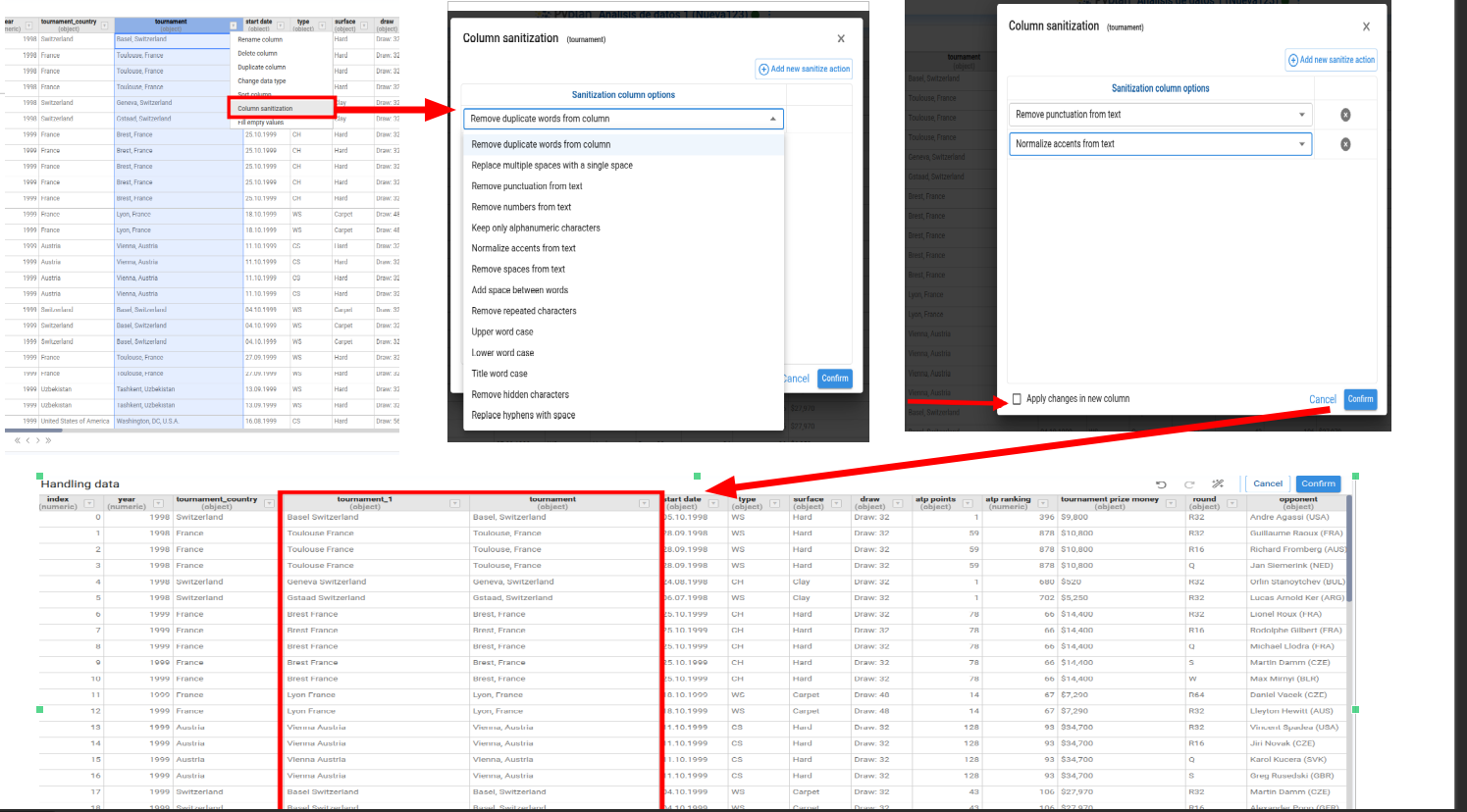

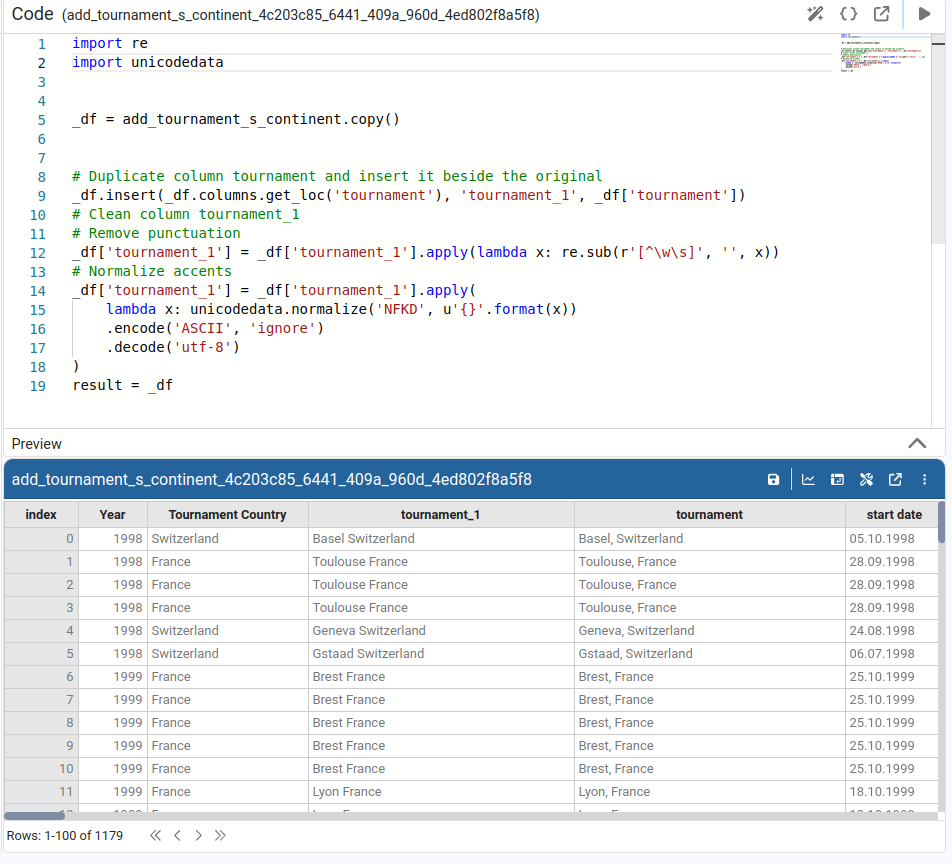

¶ Sanitize column

The column sanitization wizard applies one or more clean‑up operations to the entire column (for example, trimming spaces, changing case, replacing characters).

- We pick the actions from the list.

- We can choose whether to apply the changes in a new column or overwrite the current column using the checkbox at the bottom of the wizard.

The wizard generates the transformation code and applies it to the selected column.
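Clean-up operations of this kind are usually chained with the pandas string accessor. The sketch below is one possible combination, writing to a new column; the player column and the chosen steps are hypothetical.

```python
import pandas as pd

df = pd.DataFrame({"player": ["  rafael NADAL ", "roger-federer"]})

# Chain clean-up steps; write to a new column to keep the original
df["player_clean"] = (
    df["player"]
    .str.strip()                          # trim leading/trailing spaces
    .str.replace("-", " ", regex=False)   # replace characters
    .str.title()                          # normalize case
)
```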

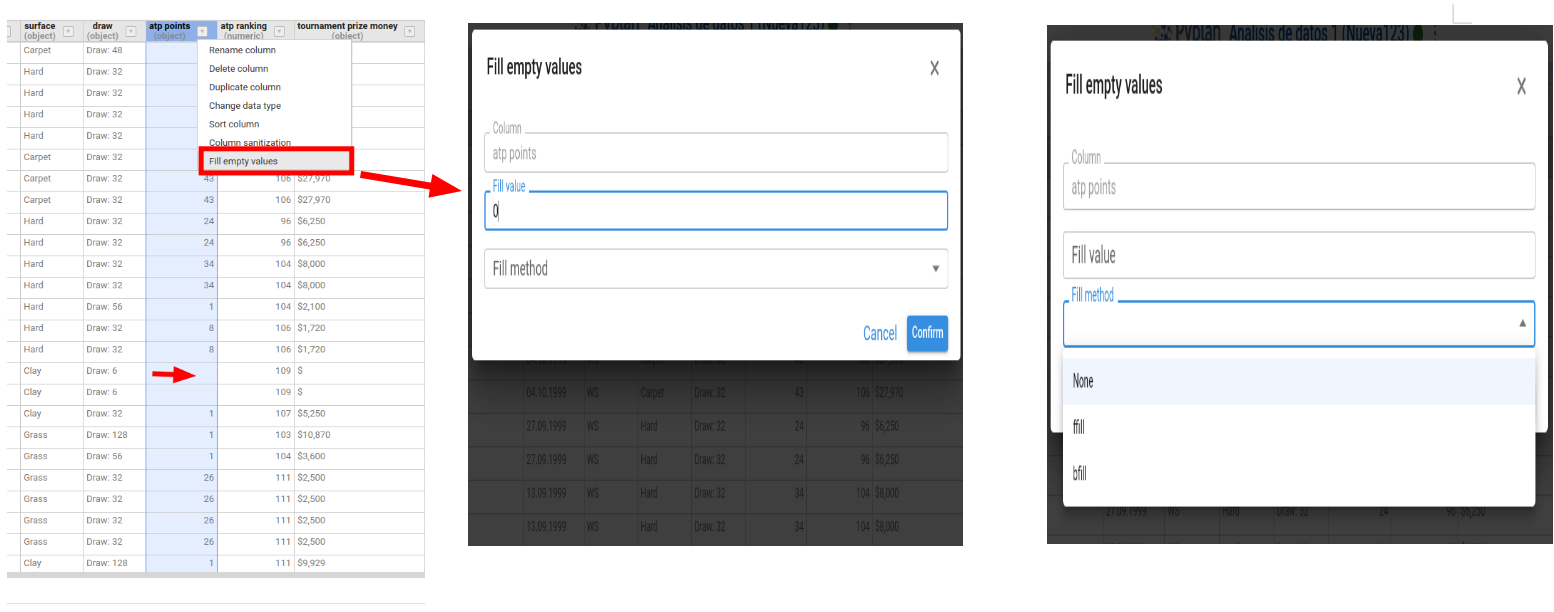

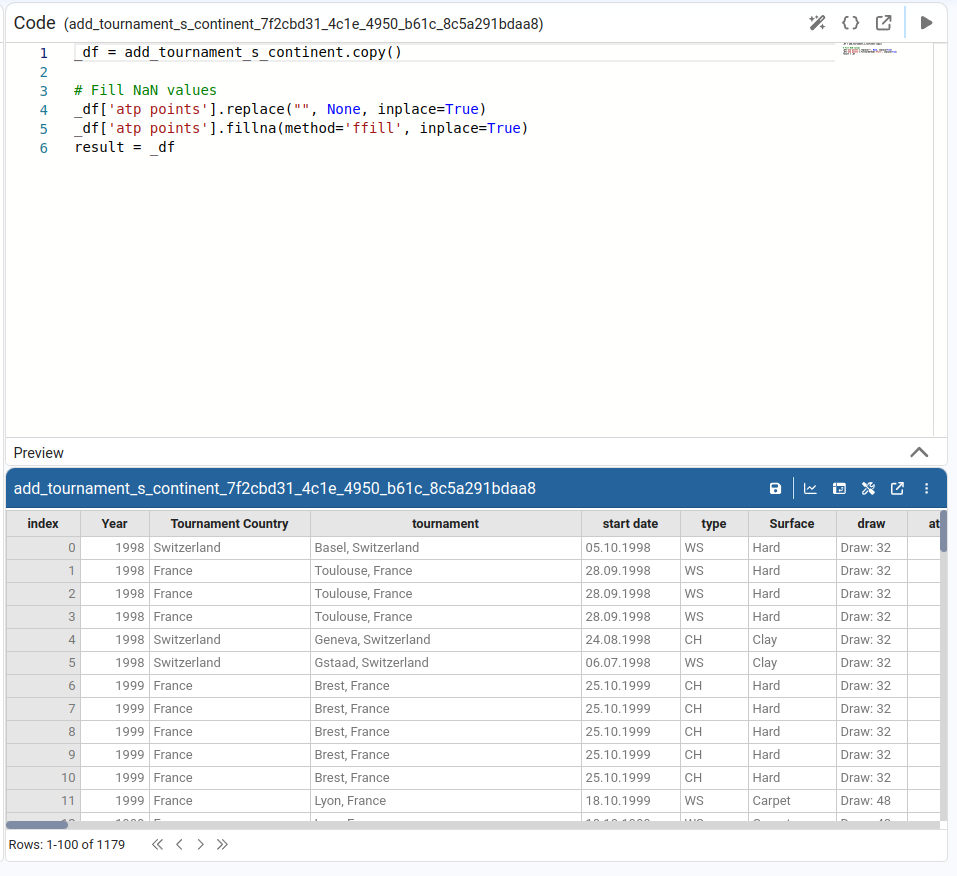

¶ Fill empty values

The fill empty values wizard fills missing or empty values in the column with a default value.

There are several options:

- Fill value – we type a literal replacement value (for example, 0, 'N/A').

- Forward fill (ffill) – for each NaN, use the last non‑null value above it in the column.

- Backward fill (bfill) – for each NaN, use the next non‑null value below it.

These methods can be combined: we can specify a literal fill value and also apply ffill or bfill as needed. The wizard generates code using fillna and/or ffill/bfill.
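To make the difference between the options concrete, here is a small sketch on an invented Series. It shows each option on its own and one possible combination; it is not the wizard's output.

```python
import numpy as np
import pandas as pd

s = pd.Series([np.nan, 1.0, np.nan, np.nan, 4.0, np.nan])

filled_literal = s.fillna(0)   # literal value everywhere
filled_forward = s.ffill()     # last non-null value above
filled_backward = s.bfill()    # next non-null value below

# Combined: forward fill first, then a literal for what is still empty
combined = s.ffill().fillna(0)
```

Forward fill leaves the first value empty because nothing precedes it, and backward fill leaves the last one empty for the symmetric reason, which is why combining a fill method with a literal value can be useful.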

Generated code by the fill empty values wizard:

¶ Additional Features

Whenever we confirm a wizard and the table content changes, Pyplan keeps an internal history of changes. This allows us to:

- Undo a transformation.

- Redo it again if needed.

In addition, there is a built‑in (non‑wizard) feature to reorder columns:

- We select one or more columns and drag them to the desired position in the table.

- This reordering is immediately reflected in the node Definition and does not require any extra confirmation.